Top 10 Best Web Scraping Tools in 2023. 2023 Top 10 Best Web Scraping Tools for Data Extraction | Web Scraping Tool | ScrapeStorm

- Top 10 Best Web Scraping Tools in 2023. 2023 Top 10 Best Web Scraping Tools for Data Extraction | Web Scraping Tool | ScrapeStorm

- Scraping Bot. What Is a Scraper Bot

- AI Web Crawler. Overview of Web Crawler Tools

- Web Scraping online. The Best Web Data Scraping Services: Top 7

- How to Get Data from a Website. 16 Tools to Extract Data from a Website

Top 10 Best Web Scraping Tools in 2023. 2023 Top 10 Best Web Scraping Tools for Data Extraction | Web Scraping Tool | ScrapeStorm


Abstract: This article introduces the top 10 best web scraping tools in 2023.

Web scraping tools are designed to extract the information you need from websites. They can save a lot of time on data extraction.

Here is a list of 10 recommended tools selected for their functionality and effectiveness.

1. ScrapeStorm

ScrapeStorm is an AI-powered visual web scraping tool that can extract data from almost any website without writing any code.

It is powerful and very easy to use: you only need to enter the URLs, and it intelligently identifies the content and the next-page button. There is no complicated configuration, and scraping takes a single click.

ScrapeStorm is a desktop app available for Windows, Mac, and Linux users. You can download the results in various formats, including Excel, HTML, TXT, and CSV. You can also export data to databases and websites.

Features:

1) Intelligent identification

2) IP Rotation and Verification Code Identification

3) Data Processing and Deduplication

4) File Download

5) Scheduled Tasks

6) Automatic Export

7) Automatic Identification of E-commerce SKUs and Large Images

Pros:

1) Easy to use

2) Fair price

3) Visual point and click operation

4) All systems supported

Cons:

No cloud services

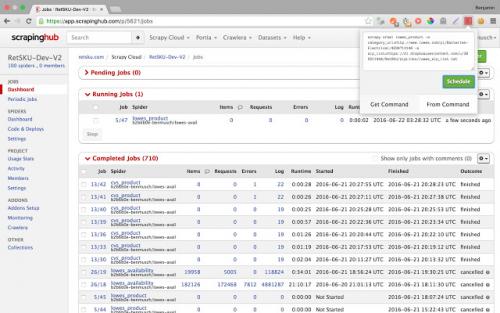

2. ScrapingHub

Scrapinghub is a developer-focused web scraping platform that offers several useful services for extracting structured information from the Internet.

Scrapinghub has four major tools: Scrapy Cloud, Portia, Crawlera, and Splash.

Features:

1) Converts an entire web page into organized content

2) Toggle for on-page JavaScript support

3) CAPTCHA handling

Pros:

1) Offers a pool of IP addresses covering more than 50 countries, which helps solve IP ban problems

2) The temporal charts are very useful

3) Handles login forms

4) The free plan retains extracted data in the cloud for 7 days

Cons:

1) No Refunds

2) Not easy to use and requires many extensive add-ons

3) Cannot process large data sets

3. Dexi.io

A web scraping and intelligent automation tool for professionals. Dexi.io is an advanced web scraping tool that enables businesses to extract and transform data from any web source using leading automation and intelligent data-mining technology.

Dexi.io allows you to scrape or interact with data from any website with human precision. Advanced features and APIs help you transform and combine data into powerful datasets or solutions.

Features:

1) Provides several integrations out of the box

2) Automatically de-duplicates data before sending it to your own systems

3) Provides tools for when robots fail
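Both ScrapeStorm and Dexi.io list deduplication as a feature. Neither vendor's exact method is described here, so the following is only a minimal Python sketch of the general idea. It assumes records are dictionaries and that a hypothetical set of key fields (here `url` and `title`) identifies a duplicate.

```python
from typing import Iterable


def deduplicate(records: Iterable[dict], key_fields: tuple[str, ...]) -> list[dict]:
    """Return records with duplicates removed, keeping the first occurrence.

    Two records count as duplicates when they agree on every field in
    key_fields after stripping whitespace and lower-casing the values.
    """
    seen: set[tuple] = set()
    unique: list[dict] = []
    for record in records:
        # Normalize the key so "Widget " and "widget" are treated as equal.
        key = tuple(str(record.get(field, "")).strip().lower() for field in key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


if __name__ == "__main__":
    # Hypothetical scraped rows; the second is a near-duplicate of the first.
    scraped = [
        {"url": "https://example.com/a", "title": "Widget", "price": "9.99"},
        {"url": "https://example.com/a", "title": "widget ", "price": "9.99"},
        {"url": "https://example.com/b", "title": "Gadget", "price": "19.99"},
    ]
    for row in deduplicate(scraped, ("url", "title")):
        print(row["url"], row["title"])
```

Keeping the first occurrence preserves the original scraping order, which is usually what downstream exports expect.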

Pros:

1) No coding required

2) Agent creation services available

Cons:

1) Difficult for non-developers

2) Robot debugging can be difficult

4. Diffbot

https://www.youtube.com/embed/qH9VYKxU1NI

Diffbot allows you to get various types of useful data from the web without hassle. You don't need to pay for costly web scraping or spend time on manual research. The tool lets you extract structured data from any URL with AI extractors.

Scraping Bot. What Is a Scraper Bot

Scraper bots are tools or pieces of code used to extract data from web pages. These bots are like tiny spiders that run through different web pages in a website to extract the specific data they were created to get.

The process of extracting data with a scraper bot is called web scraping. In the final stage, the scraper bot exports the extracted data in the format the user wants (e.g., JSON, Excel, XML, or HTML).

As simple as this process might sound, you may face a few web scraping challenges that could prevent you from getting the data you want.
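The extract-and-export stages described above can be sketched in Python with only the standard library. This minimal example parses an HTML snippet, extracts items, and exports them as JSON and CSV. The sample markup, the `product` class name, and the `name` field are illustrative assumptions rather than any real site's structure; a real bot would also download the pages over HTTP.

```python
import csv
import io
import json
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Collects the text of every <li class="product"> element (hypothetical markup)."""

    def __init__(self) -> None:
        super().__init__()
        self.items: list[str] = []
        self._in_product = False
        self._parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "li" and ("class", "product") in attrs:
            self._in_product = True
            self._parts = []

    def handle_data(self, data):
        if self._in_product and data.strip():
            self._parts.append(data.strip())

    def handle_endtag(self, tag):
        if tag == "li" and self._in_product:
            # Join text from nested tags such as <b> with single spaces.
            self.items.append(" ".join(self._parts))
            self._in_product = False


def extract(html: str) -> list[dict]:
    parser = ProductParser()
    parser.feed(html)
    return [{"name": name} for name in parser.items]


def export_json(rows: list[dict]) -> str:
    return json.dumps(rows)


def export_csv(rows: list[dict]) -> str:
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


if __name__ == "__main__":
    page = '<ul><li class="product">Widget</li><li class="product">Gadget</li></ul>'
    rows = extract(page)
    print(export_json(rows))  # [{"name": "Widget"}, {"name": "Gadget"}]
    print(export_csv(rows))
```

Separating extraction from export makes it easy to add other output formats without touching the parsing logic.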

The practical uses of scraping bots

Scraper bots help people retrieve small-scale data from multiple websites. With this data, online directories such as job boards, sports websites, and real estate websites can be built. Beyond these, much more can be done with a scraper bot. Some popular practical uses include:

Market Research: Many online retailers rely on web scraping bots to help them understand their competitors and overall market dynamics. That way, they can develop strategies that will help them stay ahead of the competition.

Stock Market Analysis: To predict the market, stock traders need data, and many of them get it through web scraping. Stock price prediction and stock market sentiment analysis based on scraped data are becoming increasingly popular. If you are a stock trader, this is something you should know about.

Search Engine Optimization (SEO): SEO companies rely heavily on web scraping. They use it to monitor their clients' competitive positions and indexing status, and they use scraper bots to find the right keywords for content. Web scraping also enables many actionable SEO techniques for optimizing a web page.
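As a rough illustration of the SEO use case, the sketch below pulls the on-page elements an SEO audit commonly checks (the `<title>`, the meta description, and the `<h1>` headings) from a page's HTML. It uses only Python's standard-library `html.parser`; the `audit` function name and the sample page are hypothetical.

```python
from html.parser import HTMLParser


class SeoParser(HTMLParser):
    """Records the <title>, meta description, and <h1> texts of a page."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.description = ""
        self.h1: list[str] = []
        self._current = None  # "title" or "h1" while capturing that element's text

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        # Attribute names are lower-cased by HTMLParser; values are not.
        if tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.description = attributes.get("content") or ""
        elif tag in ("title", "h1"):
            self._current = tag
            if tag == "h1":
                self.h1.append("")

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current == "title":
            self.title += data
        elif self._current == "h1":
            self.h1[-1] += data


def audit(html: str) -> dict:
    parser = SeoParser()
    parser.feed(html)
    return {
        "title": parser.title.strip(),
        "description": parser.description.strip(),
        "h1": [heading.strip() for heading in parser.h1],
    }
```

Running `audit` over your own pages and competitors' pages gives a quick side-by-side view of how each targets its keywords.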

AI Web Crawler. Overview of Web Crawler Tools

General Purpose Web Crawlers

- 80Legs (Cloud-based tool): Best Online Web Crawler

- Sequentum (Cloud-based tool): Premium Web Crawler for Enterprises

- OpenSearchServer (Desktop-based tool): Open-Source Crawler for Enterprise

- Apache Nutch (Desktop-based tool): Supports Customization and Extensions

- StormCrawler (Desktop-based tool): Best SDK for Low-Latency Web Crawlers

Specialized Web Crawlers

- ScrapeBox (Desktop-based Software): Best for Search Engine Crawling

- ScreamingFrog (Desktop-based Software): Best for Onsite SEO Crawling

- AtomPark Email Extractor (Desktop-based Software)

- ParseHub (Desktop-based tool): Best for Crawling News Sites

- HTTrack (Desktop-based Software): Best for Downloading Websites for Offline Use

Web crawlers are such an important tool on today's Internet that, without them, it would be a very different place to navigate. Web crawlers power search engines, are the brains behind web archives, help content creators find where their copyrighted content is being used, and help website owners see which pages on their sites need attention.

In fact, web crawlers make many tasks possible that would be practically impossible without them. As a marketer, you may need a web crawler at some point, especially if you have to collect data from across the Internet. However, finding the right web crawler for your task can be difficult.

This is because, unlike web scrapers, of which there are many general-purpose options, most popular web crawlers are specialized, so you will need to dig deeper to find one that suits your needs.

In this article, we highlight some of the top web crawlers on the market that you can use to collect data from the Internet. There are quite a few of them available for crawling websites.
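The core loop of a general-purpose crawler can be sketched in a few lines: start from a seed URL, fetch a page, extract its links, and queue the same-site links that have not been seen yet (a breadth-first traversal). The Python sketch below assumes a caller-supplied `fetch` function (hypothetical) so the traversal logic can be shown without network access. Production crawlers such as Apache Nutch or StormCrawler add politeness delays, robots.txt handling, retries, and distributed queues on top of this basic idea.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable
from urllib.parse import urldefrag, urljoin, urlparse


class LinkParser(HTMLParser):
    """Collects href values from <a> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(seed: str, fetch: Callable[[str], str], max_pages: int = 50) -> list[str]:
    """Breadth-first crawl of pages on the seed's host; returns URLs in visit order."""
    host = urlparse(seed).netloc
    queue = deque([seed])
    seen = {seed}
    visited: list[str] = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        visited.append(url)
        parser = LinkParser()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop #fragments so duplicates collapse.
            absolute, _ = urldefrag(urljoin(url, href))
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited
```

Passing `fetch` in as a parameter keeps the traversal testable; in real use it could wrap `urllib.request.urlopen` with timeouts and error handling.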

Web Scraping online. The Best Web Data Scraping Services: Top 7

We explain what web scraping is, how data obtained this way is used, and which web scraping services are available on the market.

In October 2020, Facebook filed a complaint in a US federal court against two companies accused of using two malicious extensions for the Chrome browser. These extensions allowed data to be scraped without authorization from Facebook, Instagram, Twitter, LinkedIn, YouTube, and Amazon.

Both extensions collected public and non-public user data. The companies sold this data, which was then used for marketing intelligence.

In this article, we explain how to scrape data legally and cover seven web scraping services that do not require writing code. If you want to do the scraping yourself, read our overview of scraping tools and libraries.

What is data scraping?

Data scraping, or web scraping, is a way of extracting information from a website or application (in a human-readable form) and saving it to a table or file.

The technique itself is not illegal, but the ways the data is used can be. In the next section, we will discuss the legal aspects of web scraping.

How this data is used

Web scraping has a wide range of applications. For example, marketers use it to optimize their processes.

1. Price tracking

By collecting information about products and their prices on Amazon and other platforms, you can keep track of your competitors and adapt your pricing policy.

2. Market and competitive intelligence

If you want to enter a new market and assess the opportunities, data analysis will help you make a well-informed, sound decision.

3. Social media monitoring

YouScan, Brand Analytics, and other social media monitoring platforms use scraping.

4. Machine learning

On the one hand, machine learning and AI are used to improve scraping performance. On the other hand, the data obtained through scraping is used in machine learning.

The Internet is an important source of data for machine learning algorithms.

5. Website modernization

Companies migrate outdated websites to modern platforms. To export the data quickly and easily, they can use scraping.

6. News monitoring

Scraping data from news sites and blogs lets you track the topics you are interested in and saves time.

7. Content performance analysis

Bloggers and content creators can use scraping to extract data about posts, videos, tweets, etc. into a table.

Data in this format:

- is easy to sort and edit;

- is easy to add to a database;

- can be reused;

- can be converted into charts.
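To illustrate why tabular data is convenient, here is a small Python sketch that takes scraped post metrics, sorts them, and writes them to CSV, a format that spreadsheets, databases, and charting tools can all import. The post titles, numbers, and field names are made up for illustration.

```python
import csv
import io

# Hypothetical rows a scraper might have produced for a blogger's posts.
posts = [
    {"title": "Post A", "views": 1200, "likes": 85},
    {"title": "Post B", "views": 4300, "likes": 210},
    {"title": "Post C", "views": 950, "likes": 40},
]


def top_posts(rows: list[dict], metric: str, limit: int = 10) -> list[dict]:
    """Sort rows by a numeric metric in descending order and keep the first `limit`."""
    return sorted(rows, key=lambda row: row[metric], reverse=True)[:limit]


def to_csv(rows: list[dict]) -> str:
    """Serialize rows to CSV text, using the first row's keys as the header."""
    if not rows:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0]))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


if __name__ == "__main__":
    print(to_csv(top_posts(posts, "views")))
```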

Web scraping services

Scraping requires correctly parsing the page's source code, rendering JavaScript, converting the data into a readable form, and, if necessary, filtering it. That is why there are many ready-made scraping services.

Here are the top 7 scraping tools that handle this task well.

1. Octoparse

Octoparse is an easy-to-use scraper for programmers and non-programmers alike. It has a free plan and a paid subscription.

Features:

- works with all kinds of sites: infinite scroll, pagination, login, drop-down menus, AJAX, etc.;

- saves data to Excel, CSV, JSON, an API, or a database;

- data is stored in the cloud;

- scheduled or real-time scraping;

- automatic IP rotation to avoid blocking;

- ad blocking to speed up loading and reduce the number of HTTP requests;

- supports XPath and regular expressions;

- supports Windows and macOS;

- free for simple projects; $75/month for Standard, $209/month for Professional, etc.
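Octoparse lets users work with XPath and regular expressions. Outside Octoparse, both ideas can be tried with Python's standard library: `xml.etree.ElementTree` supports a limited XPath subset, and `re` can pull numbers out of the extracted text. Note that ElementTree only parses well-formed XML/XHTML, so real-world HTML usually needs a more lenient parser. The markup and class names below are illustrative assumptions.

```python
import re
import xml.etree.ElementTree as ET

# Well-formed (XHTML-style) sample markup; the class names are hypothetical.
PAGE = """
<div>
  <div class="item"><span class="name">Widget</span><span class="price">$1,299.00</span></div>
  <div class="item"><span class="name">Gadget</span><span class="price">$25.50</span></div>
</div>
"""

# A digit, then digits or thousands separators, then an optional decimal part.
PRICE_RE = re.compile(r"\d[\d,]*(?:\.\d+)?")


def parse_price(text: str) -> float:
    """Extract the first number from a price string, ignoring currency signs and commas."""
    match = PRICE_RE.search(text)
    if match is None:
        raise ValueError(f"no price found in {text!r}")
    return float(match.group().replace(",", ""))


def extract_items(markup: str) -> list[tuple[str, float]]:
    root = ET.fromstring(markup.strip())
    items = []
    # ElementTree's XPath subset supports descendant search and [@attr='value'] predicates.
    for item in root.findall(".//div[@class='item']"):
        name = item.findtext("span[@class='name']", default="")
        price = parse_price(item.findtext("span[@class='price']", default=""))
        items.append((name, price))
    return items


if __name__ == "__main__":
    for name, price in extract_items(PAGE):
        print(name, price)
```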

2. ScrapingBee

The ScrapingBee API uses a headless browser and proxy rotation. It also has an API for scraping Google search results.

Features:

- JS rendering;

- proxy rotation;

- can be used with Google Sheets and the Chrome browser;

- free for up to 1,000 API calls; $29/month for freelancers, $99/month for businesses, etc.
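Proxy rotation, offered by both Octoparse and ScrapingBee, means spreading requests across a pool of IP addresses so that no single address sends too many. A minimal round-robin version can be sketched with Python's standard library. The proxy addresses below are placeholders from a documentation-only IP range, and this is not a description of how either service implements rotation internally.

```python
import itertools
import urllib.request

# Placeholder proxy pool (203.0.113.0/24 is reserved for documentation).
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


class ProxyRotator:
    """Hands out proxies in round-robin order."""

    def __init__(self, proxies: list[str]) -> None:
        if not proxies:
            raise ValueError("proxy pool is empty")
        self._cycle = itertools.cycle(proxies)

    def next_proxy(self) -> str:
        return next(self._cycle)

    def opener(self) -> urllib.request.OpenerDirector:
        """Build a urllib opener that routes HTTP and HTTPS through the next proxy."""
        proxy = self.next_proxy()
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return urllib.request.build_opener(handler)


# Usage (performs real network requests, so it is left commented out):
# rotator = ProxyRotator(PROXIES)
# for url in ["https://example.com/page1", "https://example.com/page2"]:
#     with rotator.opener().open(url, timeout=10) as response:
#         print(url, response.status)
```

Round-robin is the simplest policy; real rotation services typically also drop proxies that fail or get blocked.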

3. ScrapingBot

ScrapingBot provides several APIs: an API for raw HTML, an API for retail websites, and an API for scraping real estate websites.

Web scraping, also known as web data extraction, is a method of obtaining information from a website or application (in a human-readable format) and saving it to a table or file. The technique itself is not illegal, but the ways the data is used can be. In the next section, we will discuss the legal aspects of web scraping.

Legal Aspects of Web Scraping

In October 2020, Facebook filed a lawsuit against two companies accused of using two malicious browser extensions for Chrome. These extensions allowed scraping data without authorization from Facebook, Instagram, Twitter, LinkedIn, YouTube, and Amazon. Both extensions collected public and non-public user data. The companies sold these data, which were then used for market research.

Why Web Scraping Is Important

Web scraping has a wide range of applications. For example, market researchers use it to optimize processes. By collecting information about products and their prices on Amazon and other platforms, you can monitor your competitors and adapt your pricing policy.

Top 7 Services for Web Scraping

Octoparse, ScrapingBee, and ScrapingBot, described above, are among the top 7 web scraping services that do not require writing code.

Conclusion

Web scraping is a powerful tool for extracting data from the web. With the right tools and services, you can extract valuable insights and make informed decisions. Remember to always follow the terms of service and respect the rights of others when scraping data.

Source: https://lajfhak.ru-land.com/novosti/top-10-web-scraping-tools-2023-what-web-scraping

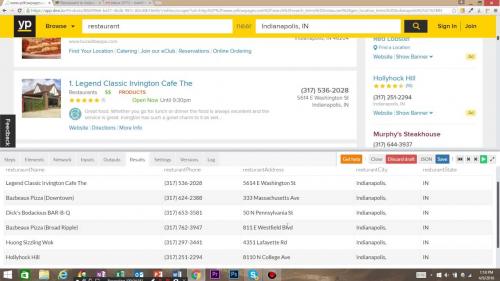

How to Get Data from a Website. 16 Tools to Extract Data from a Website

In today's business world, smart data-driven decisions are the number one priority. For this reason, companies track, monitor, and record information 24/7. The good news is there is plenty of public data on servers that can help businesses stay competitive.

The process of extracting data from web pages manually can be tiring, time-consuming, error-prone, and sometimes even impossible. That is why most web data analysis efforts use automated tools.

Web scraping is an automated method of collecting data from web pages. Data is extracted from web pages using software called web scrapers, which are basically web bots.

What is data extraction, and how does it work?

Data extraction, or web scraping, aims to extract information from a source, then process and filter it for later use in strategy building and decision-making. It may be part of digital marketing, data science, or data analytics work. The extracted data goes through the ETL process (extract, transform, load) and is then used for business intelligence (BI). This field is complex and multi-layered, and everything starts with web scraping and the tactics for extracting data effectively.

Before automation tools, data extraction was performed at the code level, which was not practical for day-to-day scraping. Today, robust no-code and low-code data extraction tools make the whole process significantly easier.
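The ETL flow described above can be sketched end to end in Python using only the standard library: extract raw records (here a hard-coded list standing in for scraper output), transform them (trim text, parse prices, drop rows that cannot be parsed), and load them into an SQLite table that BI tools could query. The table name, columns, and sample records are illustrative assumptions.

```python
import sqlite3

# Stand-in for raw scraper output (hypothetical records).
RAW = [
    {"name": "  Widget ", "price": "$9.99"},
    {"name": "Gadget", "price": "N/A"},
    {"name": "Gizmo", "price": "$19.50"},
]


def extract() -> list[dict]:
    """Extract step: in a real pipeline this would call the scraper."""
    return list(RAW)


def transform(rows: list[dict]) -> list[tuple[str, float]]:
    """Clean names, convert prices to floats, and drop rows without a valid price."""
    clean = []
    for row in rows:
        try:
            price = float(row["price"].lstrip("$"))
        except ValueError:
            continue  # skip rows whose price cannot be parsed, e.g. "N/A"
        clean.append((row["name"].strip(), price))
    return clean


def load(rows: list[tuple[str, float]], conn: sqlite3.Connection) -> None:
    """Load step: write the cleaned rows into a products table."""
    conn.execute("CREATE TABLE IF NOT EXISTS products (name TEXT, price REAL)")
    conn.executemany("INSERT INTO products (name, price) VALUES (?, ?)", rows)
    conn.commit()


if __name__ == "__main__":
    connection = sqlite3.connect(":memory:")
    load(transform(extract()), connection)
    for name, price in connection.execute("SELECT name, price FROM products ORDER BY price"):
        print(name, price)
```

Keeping the three steps as separate functions mirrors the ETL stages, so each one can be swapped out (for example, loading into a data warehouse instead of SQLite) independently.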

What are the use cases for data extraction?

To help data extraction meet business objectives, the extracted data needs to be used for a given purpose. The common use cases for web scraping may include but are not limited to:

- Online price monitoring: to dynamically change pricing and stay competitive.

- Real estate: data for building real-estate listings.

- News aggregation: as alternative data for finance and hedge funds.

- Social media: scraping to get insights and metrics for social media strategy.

- Review aggregation: scraping gathers reviews from predefined sources for brand and reputation management.

- Lead generation: the list of target websites is scraped to collect contact information.

- Search engine results: to support SEO strategy and monitor search engine results pages (SERPs).
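For the price-monitoring use case, the step after scraping is comparing competitor prices with your own. The minimal Python sketch below assumes you already have both price lists keyed by SKU; the SKUs, prices, and the 5% threshold are hypothetical. It flags products where a competitor undercuts you by more than the threshold.

```python
def undercut_report(
    own: dict[str, float], competitor: dict[str, float], threshold: float = 0.05
) -> dict[str, float]:
    """Return SKUs where the competitor is cheaper by more than `threshold` (a fraction).

    Each value is the competitor's discount relative to our price, e.g. 0.1 for 10%.
    """
    report = {}
    for sku, our_price in own.items():
        their_price = competitor.get(sku)
        if their_price is None or our_price <= 0:
            continue  # SKU not found on the competitor's site, or invalid price
        discount = (our_price - their_price) / our_price
        if discount > threshold:
            report[sku] = round(discount, 4)
    return report


if __name__ == "__main__":
    ours = {"SKU-1": 100.0, "SKU-2": 50.0, "SKU-3": 20.0}
    theirs = {"SKU-1": 90.0, "SKU-2": 49.0}
    print(undercut_report(ours, theirs))  # {'SKU-1': 0.1}
```

How to react to a flagged SKU (match the price, ignore it, or alert a person) is a business decision left outside this sketch.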

Is it legal to extract data from websites?

Web scraping has become the primary method of data collection, but is it legal to use the data? There is no definite answer or strict regulation, but data extraction may be considered illegal if you use non-public information. Every tip described below targets publicly available data, which is generally legal to extract. However, using the scraped data for commercial purposes may still be illegal.
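Separately from the legal question, a widely followed courtesy is to check a site's robots.txt before crawling it. Python's standard library ships `urllib.robotparser` for this. The sketch below parses a sample robots.txt supplied as text so it runs offline; the rules, the `MyScraperBot` user agent, and the URLs are hypothetical. In practice you would call `set_url()` and `read()` to download the site's real file. Keep in mind that robots.txt compliance is not the same thing as legal permission.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt rules from a string instead of downloading them."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rules = build_parser(ROBOTS_TXT)
    for url in ["https://example.com/products", "https://example.com/private/data"]:
        print(url, rules.can_fetch("MyScraperBot", url))
    print("crawl delay:", rules.crawl_delay("MyScraperBot"))
```

A polite scraper would skip disallowed URLs and wait at least the crawl delay (in seconds) between requests to the same site.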

How to extract data from a website

Manually extracting data from a website (copying and pasting information into a spreadsheet) is time-consuming and difficult when dealing with big data. If the company has in-house developers, it can build a web scraping pipeline. There are several alternatives to manual extraction.

1. Code a web scraper with Python

You can build a web scraper in any general-purpose programming language, such as Java, JavaScript, PHP, C, or C#. However, Python is the top choice because of its simplicity and the wide availability of libraries for web scraping.
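A minimal Python scraper needs only the standard library: `urllib.request` to download a page and `html.parser` to walk its tags. The sketch below collects each link's text and absolute URL. The target URL and User-Agent string are placeholders, and a production scraper would typically add error handling, rate limiting, and a more convenient third-party parser.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.request import Request, urlopen


class LinkTextParser(HTMLParser):
    """Collects (text, absolute_url) pairs for every <a href> on a page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[tuple[str, str]] = []
        self._href = None
        self._text: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self._href = urljoin(self.base_url, href)
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Collapse runs of whitespace in the link text.
            self.links.append((" ".join("".join(self._text).split()), self._href))
            self._href = None


def scrape_links(html: str, base_url: str) -> list[tuple[str, str]]:
    parser = LinkTextParser(base_url)
    parser.feed(html)
    return parser.links


def fetch(url: str) -> str:
    # Placeholder User-Agent; identify your scraper honestly.
    request = Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urlopen(request, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


# Usage (makes a real HTTP request):
# for text, link in scrape_links(fetch("https://example.com/"), "https://example.com/"):
#     print(text, "->", link)
```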

2. Use a data service

A data service is a professional web service that provides research and data extraction tailored to business requirements. Such services may be a good option if you have a budget for data extraction.

3. Use Excel for data extraction

This method may surprise you, but Microsoft Excel can be a useful tool for data manipulation: it can import information from a web page directly into a worksheet. The only limitation is that this method works only for extracting tables.
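Excel's web import targets HTML tables, and the same table-only extraction is easy to reproduce in code. This Python sketch (standard library only; the sample table is made up) turns every table row into a list of cell strings and writes the rows as CSV, which Excel can open directly.

```python
import csv
import io
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collects rows of <td>/<th> cell text from the tables in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.rows: list[list[str]] = []
        self._row = None
        self._cell = None

    def _close_cell(self):
        if self._cell is not None and self._row is not None:
            self._row.append(" ".join("".join(self._cell).split()))
        self._cell = None

    def _close_row(self):
        self._close_cell()
        if self._row is not None:
            self.rows.append(self._row)
        self._row = None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._close_row()  # HTML allows omitting </tr>
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._close_cell()  # HTML allows omitting </td>
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th"):
            self._close_cell()
        elif tag in ("tr", "table"):
            self._close_row()


def table_to_csv(html: str) -> str:
    parser = TableParser()
    parser.feed(html)
    buffer = io.StringIO()
    csv.writer(buffer).writerows(parser.rows)
    return buffer.getvalue()


if __name__ == "__main__":
    sample = "<table><tr><th>Name</th><th>Price</th></tr><tr><td>Widget</td><td>9.99</td></tr></table>"
    print(table_to_csv(sample))
```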

4. Web scraping tools

Modern data extraction tools are robust no-code/low-code solutions that support business processes. With the three types of data extraction tools (batch processing, open-source, and cloud-based), you can build a cycle of web scraping and data analysis. So, let's review the best tools available on the market.