5 best Web Scraping Tools to Extract Online Data. Top 10 Web Scraping Tools for Online Data Extraction

- 5 best Web Scraping Tools to Extract Online Data. Top 10 Web Scraping Tools for Online Data Extraction

- Octoparse Premium Pricing & Packaging

- Data Scraping. Scraping Data

- Web scraper cloud. A CLOUD BASED WEB SCRAPER

- Web scraper tutorial. BeautifulSoup Library

- Web Scraping test. Web Scraping Tools

- Scrap. 9 documentation

5 best Web Scraping Tools to Extract Online Data. Top 10 Web Scraping Tools for Online Data Extraction

List of the Best free Web Scraping Software and Tools for extracting data online without coding:

What is Web Scraping?

Web scraping is a technique used to extract data from websites. It is also called web harvesting.

The extracted data is saved either to a local file on the computer or to a database. Web scraping is the process of collecting data from the web automatically.
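As a minimal sketch of the two storage options just mentioned, the Python snippet below writes a couple of records to CSV text and to a SQLite table. The records, field names, and table name are hypothetical and serve only as an illustration.

```python
import csv
import io
import sqlite3

# Hypothetical scraped records; the field names are illustrative only.
records = [
    {"title": "Example product A", "price": "19.99"},
    {"title": "Example product B", "price": "5.00"},
]


def to_csv_text(rows):
    """Serialize rows to CSV text (write this string to a file to keep it locally)."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["title", "price"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_database(rows, connection):
    """Insert rows into a SQLite table and return the number of stored rows."""
    connection.execute("CREATE TABLE IF NOT EXISTS items (title TEXT, price REAL)")
    connection.executemany(
        "INSERT INTO items (title, price) VALUES (:title, :price)", rows
    )
    connection.commit()
    return connection.execute("SELECT COUNT(*) FROM items").fetchone()[0]


csv_text = to_csv_text(records)
db = sqlite3.connect(":memory:")  # in-memory database, so the sketch leaves no files behind
stored = to_database(records, db)
```

Replacing `":memory:"` with a file path would persist the database on disk.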

How is Web Scraping performed?

To scrape data from a website, a piece of software called a scraper is used. The scraper sends a GET request to the website from which the data needs to be scraped.
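The GET request step can be sketched with Python's standard library. The helper names, the User-Agent string, and the example URL below are assumptions made for illustration, not part of any particular tool.

```python
from urllib.request import Request, urlopen


def build_get_request(url):
    """Build (but do not send) a GET request with an identifying User-Agent."""
    return Request(url, headers={"User-Agent": "example-scraper/0.1"}, method="GET")


def fetch_html(url, timeout=10):
    """Send the GET request and return the response body decoded as text."""
    with urlopen(build_get_request(url), timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


# Building the request needs no network access; sending it does.
request = build_get_request("https://example.com/")
```

Calling `fetch_html("https://example.com/")` would perform the actual download, after which the returned HTML can be parsed for the data of interest.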

There are two methods for performing web scraping: one accesses the web directly via HTTP or a web browser, and the other uses a bot or web crawler.

Web scraping is often considered bad or illegal, but that is not always the case. Government websites frequently make data available for public use, and it is also made available through APIs. However, because this work needs to be performed for high data volumes, scrapers are used.

Uses of Web Scraping

Web Scraping is used for research work, sales, marketing, finance, e-commerce, etc. Many times, it is used to know more about your competitors.

The following image will show you the typical uses of web scraping and their percentage.

Pro Tip: When selecting a web scraping tool, consider the output formats it supports, its ability to scrape modern websites (for example, support for Ajax controls), its pricing plans, and its automation and reporting capabilities.


Listed below are the top web scraping tools that you should know.

| Tool | Description | Output formats | Best for | Free trial | Price |
|---|---|---|---|---|---|
| Smartproxy | Effortlessly scrape web data you need | JSON, HTML | Individuals and businesses | Available | Lite: $50/month. Basic: $100/month. Standard: $280/month, $480/month. |
| Nimble | Collect data from any website effortlessly | -- | Individuals and businesses | Available | Starts at $300/month. |
| ScraperAPI | We handle 2 billion API requests per month for over 1,000 businesses and developers around the world | TXT, HTML, CSV, or Excel | Small, medium, and enterprise businesses as well as individuals | Available | 1,000 free API calls, then starts at $29 per month. |
| Web Scraper | Chrome extension: a free tool to scrape dynamic web pages. | -- | -- | Available | Free: Browser extension. Project: $50/month. Professional: $100/month. Business: $200/month. Scale: $300/month. |
| Grepsr | Web scraping service platform that’s effortless. | XML, XLS, CSV, and JSON | Everyone | You can sign up for free | Starter Plan: starts at $129/site for 50K records. Monthly Plan: starts at $99/site. Enterprise Plan: get a quote. |
| ParseHub | A web scraping tool that is easy to use. | JSON, Excel, and API | Executives, data scientists, software developers, business analysts, pricing analysts, consultants, marketing professionals, etc. | Free plan available | Free plan for everyone. Standard: $149 per month. Professional: $499 per month. Enterprise: get a quote. |

Let’s see the detailed review of each tool on the list.

Price: Smartproxy offers flexible plans for its APIs, depending on the number of requests you need. Monthly subscriptions range from $50 to $480 for the smaller plans (up to 400K requests), which you can subscribe to from the dashboard. For businesses that require more scalability, Smartproxy offers enterprise plans based on use case, target, and project scope.

Those using proxies for data collection can spend as little as $12.50 per GB for residential proxies. The smallest subscription plan, a fixed 8 GB per month, costs $80.

Octoparse Premium Pricing & Packaging

5 Day Money Back Guarantee on All Octoparse Plans

- All features in Free, plus:

- 100 tasks

- Run tasks with up to 6 concurrent cloud processes

- IP rotation

- Local boost mode

- 100+ preset task templates

- IP proxies

- CAPTCHA solving

- Image & file download

- Automatic export

- Task scheduling

- API access

- Standard support

Professional Plan

Ideal for medium-sized businesses. $249 / month when billed monthly (or $209 / month when billed annually).

- All features in Standard, plus:

- 250 tasks

- Up to 20 concurrent cloud processes

- Advanced API

- Auto backup data to cloud

- Priority support

- Task review & 1-on-1 training

Enterprise

For businesses with high capacity requirements. Starting from $4899 / Year

Enjoy all the Pro features, plus scalable concurrent processors, multi-role access, tailored onboarding, priority instant chat support, and enterprise-level automation and integration.

Data Service

Starting from $399

Simply relax and leave the work to us. Our data team will meet with you to discuss your web crawling and data processing requirements.

Crawler Service

Starting from $250

Data Scraping. Scraping Data

The rapid growth of the World Wide Web has significantly changed the way we share, collect, and publish data. A vast amount of information is being stored online, in both structured and unstructured forms. For certain questions or research topics, this has created a new problem: the concern is no longer data scarcity and inaccessibility but, rather, overcoming the tangled masses of online data.

Collecting data from the web is not an easy process, as there are many technologies used to distribute web content. Therefore, dealing with more advanced web scraping requires familiarity with accessing data stored in these technologies via R. In this section I will provide an introduction to some of the fundamental tools required to perform basic web scraping. This includes importing spreadsheet data files stored online, scraping HTML text, scraping HTML table data, and leveraging APIs to scrape data.

My purpose in the following sections is to discuss these topics at a level meant to get you started in web scraping; however, this area is vast and complex, and this chapter is far from providing expert-level insight. To advance your knowledge I highly recommend getting copies ofand

Note: the examples provided below were performed in 2015. Consequently, if you apply the code provided throughout these examples, your outputs may differ because webpages and their content change over time.

Importing Spreadsheet Data Files Stored Online

The most basic form of getting data from online is to import tabular (i.e. .txt, .csv) or Excel files that are hosted online. This is often not considered web scraping; however, I think it's a good place to start introducing the user to interacting with the web for obtaining data. Importing tabular data is especially common for the many types of government data available online. A quick perusal of Data.gov illustrates over 190,000 examples. In fact, we can provide our first example of importing online tabular data by downloading the Data.gov .csv file that lists all the federal agencies that supply data to Data.gov.

# the url for the online CSV url
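The surrounding text works in R; purely as a cross-language illustration, the idea of reading a CSV file hosted online can be sketched in Python with the standard library. The function names and the sample data below are hypothetical, and the sample string stands in for a downloaded file so the sketch runs without network access.

```python
import csv
import io
from urllib.request import urlopen


def parse_csv_text(text):
    """Parse CSV text into a list of dictionaries keyed by the header row."""
    return list(csv.DictReader(io.StringIO(text)))


def read_online_csv(url, timeout=10):
    """Download a CSV file from a URL and parse it (requires network access)."""
    with urlopen(url, timeout=timeout) as response:
        return parse_csv_text(response.read().decode("utf-8"))


# Stand-in for a downloaded file; the column names and values are made up.
sample = "agency,datasets\nExample Agency A,12\nExample Agency B,7\n"
rows = parse_csv_text(sample)
```

With a real URL, `read_online_csv(url)` would return the same kind of list of row dictionaries.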

Downloading Excel spreadsheets hosted online can be performed just as easily. Recall that there is no base R function for importing Excel data; however, several packages exist to handle this capability. One package that works smoothly for pulling Excel data from URLs is gdata. With gdata we can use read.xls() to download this Excel file from the given url.

Note that many of the arguments covered previously (i.e. specifying sheets to read from, skipping lines) also apply to read.xls(). In addition, gdata provides some useful functions (sheetCount() and sheetNames()) for identifying whether multiple sheets exist prior to downloading. Check out the gdata documentation for more help.

Special note when using gdata on Windows: When downloading excel spreadsheets from the internet, Mac users will be able to install the

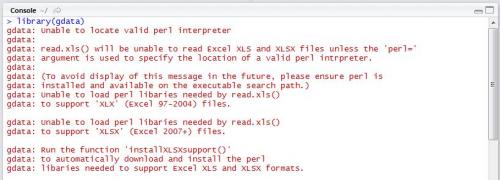

If you are a Windows user and attempt to attach the gdata library immediately after installation, you will likely receive the warning message given in Figure 1. You will not be able to download Excel spreadsheets from the internet without installing some additional software.

gdata without Perl

Unfortunately, it’s not as straightforward to fix as the error message would indicate. Running installXLSXsupport() won’t completely solve your problem without Perl software installed. In order for gdata to function properly, you must install ActiveState Perl using the following link: http://www.activestate.com/activeperl/. The download could take up to 10 minutes or so, and when finished, you will need to find where the software was stored on your machine (likely directly on the C:/ drive).

Once Perl software has been installed, you will need to direct R to find it each time you call the function.

Another common form of file storage is the zip file. For instance, the Bureau of Labor Statistics (BLS) stores some of its data in .zip files. We can use download.file() to download the file to our working directory and then work with the data as desired.
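The text describes this workflow in R; as a rough Python counterpart, the sketch below reads a member out of a zip archive held in memory. The helper names are hypothetical, and a tiny archive is built locally as a stand-in for a downloaded file so that the example runs offline.

```python
import io
import zipfile
from urllib.request import urlopen


def download_bytes(url, timeout=30):
    """Download a file and return its raw bytes (requires network access)."""
    with urlopen(url, timeout=timeout) as response:
        return response.read()


def list_and_read(zip_bytes, member=None):
    """Return (member names, text of the chosen member) for an in-memory zip archive."""
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        names = archive.namelist()
        chosen = member or names[0]  # default to the first file in the archive
        return names, archive.read(chosen).decode("utf-8")


# Build a tiny archive in memory as a stand-in for a downloaded .zip file.
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr("example.csv", "id,value\n1,10\n")

names, text = list_and_read(buffer.getvalue())
```

For a real archive, `list_and_read(download_bytes(url))` would chain the download and the extraction.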

Web scraper cloud. A CLOUD BASED WEB SCRAPER

Introduction

In this article, we will guide you through the process of building a web scraper and setting it up to run autonomously on the cloud. It's important to understand what web scraping is before we delve into deployment. According to Wikipedia, web scraping is the process of extracting data from websites. There are various reasons one might want to extract data from a website, such as for analytical purposes or personal use. The use case will depend on the specific needs and goals of the individual or organization. If you're interested in learning more, the Wikipedia article on web scraping provides a comprehensive overview of the topic.

There are several techniques for web scraping that can be implemented using a variety of programming languages. In this article, we will be using the Python programming language. Don't worry if you're not familiar with Python, as we will be explaining each step in detail. If you do have a basic understanding of Python syntax, this should be a fairly easy process.

Our web scraper will be tasked with extracting news articles from a specific news website. The main reason for creating an autonomous web scraper is to extract data that is constantly being updated, such as news articles. This allows us to easily gather and analyze the latest information from a particular site. So, let's get started and build our web scraper!

Disclaimer: before scraping any website be sure to read their user terms and conditions. Some sites may take legal action if you don't follow usage guidelines.

Platforms and services

In this section, we will provide an overview of the platforms and services we will be using to create a cloud-based web scraper as an example. We will briefly explain the purpose and function of each platform or service to give you a better understanding of how they will be used in the process.

- IBM Cloud platform: this will be our cloud platform of choice, because you can access several services without having to provide credit card information. For our example we'll work with:

- Cloud Functions service: this service will allow us to execute our web scraper on the cloud.

- Cloudant: a non-relational, distributed database service. We'll use this to store the data we scrape.

- Docker container platform: this platform will allow us to containerize our web scraper in a well-defined environment with all necessary dependencies. This allows our web scraper to work on any platform that supports Docker containers. In our example, our Docker container will be used by the IBM Cloud Functions service.

- GitHub: we'll use GitHub for version control and also to link to our Docker container. Linking our Docker container to a GitHub repository containing our web scraper will automatically initiate a new build of our Docker container image. The new image will carry all changes made to the repository's content.

- Cloud PhantomJS platform: this platform will help render the web pages from the HTTP requests we'll make on the cloud. Once a page is rendered, the response is returned as HTML.

- Rapid API platform: this platform will help manage our API calls to the cloud PhantomJS platform and also provide an interface that shows execution statistics.
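To make the role of the Cloud Functions service more concrete, here is a minimal sketch of what a Python action for it could look like: an entry point named `main` that receives a dictionary of parameters and returns a dictionary. The `html` parameter name and the assumption that headlines live in `<h2>` tags are hypothetical; in the setup described above, the rendered HTML would come from the PhantomJS service and the results would be written to Cloudant, and both steps are omitted here.

```python
from html.parser import HTMLParser


class HeadlineParser(HTMLParser):
    """Collect the text of every <h2> element (assumed here to hold headlines)."""

    def __init__(self):
        super().__init__()
        self.headlines = []
        self._in_h2 = False
        self._parts = []

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self._in_h2 = True
            self._parts = []

    def handle_data(self, data):
        if self._in_h2:
            self._parts.append(data)

    def handle_endtag(self, tag):
        if tag == "h2" and self._in_h2:
            self._in_h2 = False
            text = "".join(self._parts).strip()
            if text:
                self.headlines.append(text)


def main(params):
    """Action entry point: takes a dict of parameters and returns a dict."""
    parser = HeadlineParser()
    parser.feed(params.get("html", ""))  # "html" is a hypothetical parameter name
    parser.close()
    return {"count": len(parser.headlines), "headlines": parser.headlines}


# Local dry run with a made-up page fragment.
result = main({"html": "<h2>First story</h2><p>text</p><h2><a href='#'>Second story</a></h2>"})
```

Keeping the parsing logic free of network and database calls makes it easy to exercise locally before packaging it in a container.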

Web scraper tutorial. BeautifulSoup Library

BeautifulSoup is used to extract information from HTML and XML files. It provides a parse tree and functions to navigate, search, or modify that parse tree.

- Beautiful Soup is a Python library used to pull data out of HTML and XML files for web scraping purposes. It produces a parse tree from page source code that can be used to extract data hierarchically and more legibly.

Features of Beautiful Soup

Beautiful Soup is a Python library designed for quick-turnaround projects like screen-scraping. Three features make it powerful:

1. Beautiful Soup provides a few simple methods and Pythonic idioms for navigating, searching, and modifying a parse tree: a toolkit for dissecting a document and extracting what you need. It doesn’t take much code to write an application.

2. Beautiful Soup automatically converts incoming documents to Unicode and outgoing documents to UTF-8. You don’t have to think about encodings unless the document doesn’t specify an encoding and Beautiful Soup can’t detect one. Then you just have to specify the original encoding.

3. Beautiful Soup sits on top of popular Python parsers like lxml and html5lib, allowing you to try out different parsing strategies or trade speed for flexibility.
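The navigate, search, and modify operations described above can be sketched as follows. This assumes the third-party `beautifulsoup4` package is installed; the HTML fragment is made up, and Python's built-in `html.parser` is used so that no extra parser is required.

```python
from bs4 import BeautifulSoup

# A made-up page fragment used only for illustration.
html = """
<html><body>
  <h1>Example news</h1>
  <ul>
    <li><a href="/story-1">First story</a></li>
    <li><a href="/story-2">Second story</a></li>
  </ul>
</body></html>
"""

soup = BeautifulSoup(html, "html.parser")

# Navigate: attribute access walks to the first matching tag.
title = soup.h1.get_text(strip=True)

# Search: find_all returns every matching tag in document order.
links = [(a.get_text(strip=True), a["href"]) for a in soup.find_all("a")]

# Modify: assigning to .string replaces the tag's text content.
soup.h1.string = "Updated heading"
```

Passing `"lxml"` or `"html5lib"` as the second argument would select a different parser, provided that parser is installed.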

Source: https://lajfhak.ru-land.com/stati/top-13-web-scraping-tools-2023-so-what-does-web-scraper-do

Web Scraping test. Web Scraping Tools

This is the most popular web scraping method, in which a business deploys ready-made software for all its web scraping use cases.

If you want to access and gather data at scale, you need good web scraping tools that can get past IP blocking, cloaking, and reCAPTCHA. Popular tools include Scrapy, Beautiful Soup, Scrapebox, Scrapy Proxy Middleware, Octoparse, ParseHub, and Apify.

These tools help you with web scraping tasks at scale and can get past different obstacles to help you achieve your goals.

Selenium is a popular open-source web automation framework used for automated browser testing. It helps you write test scripts that automate the testing of websites and web applications and run them in different browsers on multiple platforms, using a programming language of your choice. However, it can also be adapted to solve dynamic web scraping problems, for example by driving Selenium from JavaScript.

Selenium has three major components:

- Selenium IDE: It is a browser plugin – a faster, easier way to create, execute, and debug your Selenium scripts.

- Selenium WebDriver: It is a set of portable APIs that help you write automated tests in any language that runs on top of your browser.

- Selenium Grid: It automates the process of distributing and scaling tests across multiple browsers, operating systems, and platforms.

Scrap. 9 documentation

Scrapy is a fast, high-level web crawling and web scraping framework, used to crawl websites and extract structured data from their pages. It can be used for a wide range of purposes, from data mining to monitoring and automated testing.

Getting help

Having trouble? We’d like to help!

Try the FAQ – it’s got answers to some common questions.

Ask or search questions in StackOverflow using the scrapy tag.

Ask or search questions in the Scrapy subreddit.

Search for questions on the archives of the scrapy-users mailing list.

Ask a question in the #scrapy IRC channel.

Report bugs with Scrapy in our issue tracker.

Join the Discord community Scrapy Discord.

First steps

Understand what Scrapy is and how it can help you.

Get Scrapy installed on your computer.

Write your first Scrapy project.


Basic concepts

Learn about the command-line tool used to manage your Scrapy project.

Write the rules to crawl your websites.

Extract the data from web pages using XPath.

Test your extraction code in an interactive environment.

Define the data you want to scrape.

Populate your items with the extracted data.

Post-process and store your scraped data.

Output your scraped data using different formats and storages.

Understand the classes used to represent HTTP requests and responses.

Convenient classes to extract links to follow from pages.

Learn how to configure Scrapy and see all available settings.

See all available exceptions and their meaning.

Built-in services

Learn how to use Python’s built-in logging on Scrapy.

Collect statistics about your scraping crawler.

Send email notifications when certain events occur.

Inspect a running crawler using a built-in Python console.

Solving specific problems

Get answers to most frequently asked questions.

Learn how to debug common problems of your Scrapy spider.

Learn how to use contracts for testing your spiders.

Get familiar with some Scrapy common practices.

Tune Scrapy for crawling a lot of domains in parallel.

Learn how to scrape with your browser’s developer tools.

Read webpage data that is loaded dynamically.

Learn how to find and get rid of memory leaks in your crawler.

Download files and/or images associated with your scraped items.

Deploy your Scrapy spiders and run them on a remote server.

Adjust crawl rate dynamically based on load.

Check how Scrapy performs on your hardware.

Learn how to pause and resume crawls for large spiders.