Best Alternatives for Scrapy Web Scraping. Why do we need alternatives to Scrapy?

- Best Alternatives for Scrapy Web Scraping. Why do we need alternatives to Scrapy?

- Apify demo. Building Apify integrations in Transposit

- Scrapydweb alternatives. Projects that are alternatives of or similar to Scrapydweb

- Crawlee vs Scrapy. Focused vs. Broad Crawling

- Apify sdk. Overview

- Scrapy example. Introducing Scrapy

- Scrapy-playwright. Scrapy Splash

- Scrapy Alternatives for Web Scraping & Crawling

Best Alternatives for Scrapy Web Scraping. Why do we need alternatives to Scrapy?

Like any tool, Scrapy has its limitations, which is why people keep looking for alternatives to it.

Let us look at what people are unhappy with in Scrapy.

1. Learn to code

Scrapy is a code-first tool, so you need to be comfortable with Python. Non-programmers cannot simply pick it up, which in practice means a company has to hire or assign a developer to write and run the spiders that crawl sites and extract data.

Someone new to programming may need a month or so to learn enough Python to be productive. The learning curve is steep, and that time adds up.

You also need to invest in learning Scrapy itself. Because it is driven entirely by code and the command line, there is no point-and-click interface to fall back on, so training costs can be significant.

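Running Scrapy in production can also be expensive. The framework itself is free and open source, but a working setup usually needs extra pieces, such as scheduling, proxies, JavaScript rendering, and hosting, which you have to pay for or build and then integrate yourself.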

2. Deploy cloud / Difficult to scale

Before choosing any web scraper, it is important to check how it scales. A tool that cannot fetch many pages at once turns large jobs into a time-consuming task. Scrapy does fetch pages concurrently, but out of the box it runs on a single machine, so its throughput is capped by that machine's CPU, memory, and bandwidth. Once you reach that limit, the way forward is to deploy your spiders to the cloud or spread them across several servers.

Scrapy does not come with a built-in cloud platform that works as a one-stop solution. You have to rent cloud infrastructure or a hosting service separately and set up the deployment yourself. The whole process takes time and effort, which businesses find disappointing.
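On a single machine, how many pages Scrapy fetches at once is governed by a handful of project settings. The fragment below is a minimal sketch of a `settings.py`; the setting names are Scrapy's own, but the values are illustrative assumptions, not recommendations.

```python
# settings.py (fragment). Values are illustrative assumptions; tune them per site.

CONCURRENT_REQUESTS = 32             # total requests in flight across all domains
CONCURRENT_REQUESTS_PER_DOMAIN = 8   # cap for any single domain
DOWNLOAD_DELAY = 0.25                # seconds to wait between requests to the same site

# AutoThrottle adjusts the delay based on how quickly the server responds.
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_TARGET_CONCURRENCY = 4.0
```

Raising these numbers only helps until the machine or the target site becomes the bottleneck, which is the point where deployment to more servers comes in.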

3. Integrate proxy to avoid blocking

Once you start scraping a site, its server sees your IP address on every request. After enough unusually frequent visits from the same address, the site may block it, and your scraper can no longer reach the pages. So how do you still extract the data you want?

For this, you will have to use proxies. A proxy is an intermediary server that forwards your requests, so the target site sees the proxy's IP address instead of yours. By rotating through several proxies, your scraper is much less likely to get blocked.

However, Scrapy only covers part of this. It can send a request through a proxy (its built-in HttpProxyMiddleware honors the proxy set on each request), but it does not supply proxies or rotate them for you. You have to obtain a proxy pool, typically by buying one from a third party, and wire the rotation into your project yourself, which is an additional expense.
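The rotation logic itself is small. Below is a minimal sketch of a round-robin proxy pool in plain Python. The proxy URLs and the `ProxyRotator` class are hypothetical names made up for illustration; the only Scrapy-specific detail it relies on is that HttpProxyMiddleware reads the proxy URL from `request.meta["proxy"]`.

```python
from itertools import cycle

# Hypothetical proxy endpoints; replace with proxies you are allowed to use.
PROXIES = [
    "http://proxy-a.example.com:8000",
    "http://proxy-b.example.com:8000",
    "http://proxy-c.example.com:8000",
]


class ProxyRotator:
    """Hands out proxies in round-robin order."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._pool = cycle(proxies)

    def next_meta(self):
        # Build the meta dict that Scrapy's HttpProxyMiddleware looks at.
        return {"proxy": next(self._pool)}


rotator = ProxyRotator(PROXIES)

# Simulate four outgoing requests; the fourth wraps around to the first proxy.
assigned = [rotator.next_meta()["proxy"] for _ in range(4)]
```

Inside a spider, the dict would be passed along with each request, for example `scrapy.Request(url, meta=rotator.next_meta())`. Round-robin is the simplest policy; a real pool would probably also drop proxies that start failing.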

4. Doesn’t handle JS

Scrapy cannot execute JavaScript on its own. To scrape pages that render their content in the browser, it has to rely on an add-on such as Splash or scrapy-playwright to do the rendering for you.

Some of the best alternatives to Scrapy include:

Apify demo. Building Apify integrations in Transposit

Apify is the place to find, develop, buy and run cloud programs called actors. These actors allow you to perform web scraping, data extraction or automation tasks on any website, regardless of whether it has an API or not. Apify's actors, tasks, and datasets put the data of the web at your and your team's fingertips.

The best Apify solutions are ones that are able to relay their information with as little manual input as possible. If the Apify Store does not have the solution that works for you, Transposit provides the perfect platform for you to begin developing your own app with any Apify actor you like. Transposit handles the overhead so you get to focus on the fun. Imagine connecting Apify to Slack, Google Sheets, or Twilio without worrying about auth!

Key Features

Managed authentication

Your Apify integrations need to work with cloud services on behalf of your users. Transposit manages users, their credentials, refreshing tokens, and storing them securely.

User customization

When you’re building a custom Apify integration you want it to be, well, custom! Transposit gives users a simple mechanism to customize it: specify a custom start URL, sync run information to Slack, or query your datasets as if they were in SQL.

Broad API support

Connect and authenticate in seconds to the services you use. Experiment interactively rather than scouring documentation. Check out the growing list of connectors.

Up-level your work

Transposit’s relational engine puts SQL in front of any API and lets you mix in JavaScript when you need it. Write less code in a language designed to manipulate, transform, and compose data.

Serverless

Build your logic and let Transposit host it for you. Build and deploy an app for your entire team in minutes. Really.

Fork and customize examples

Sample applications

Here are a few starting points. Try them out, view the code, fork a copy, and customize them to suit your needs.

Pridebot

A bot that matches you with the soonest Pride event in your city, using Twilio and Apify.

Amazon Review Scraper

Copy over all Amazon reviews of a product to a new Google Sheet.

Apify Demo

Sample API calls to start using Apify in Transposit.

Scrapydweb alternatives. Projects that are alternatives of or similar to Scrapydweb

logparser

A tool for parsing Scrapy log files periodically and incrementally, extending the HTTP JSON API of Scrapyd.

Stars : ✭ 70 (-97.06%)

Mutual labels: scrapy , scrapyd , log-parsing , scrapy-log-analysis , scrapyd-log-analysis

Spiderkeeper

admin ui for scrapy/open source scrapinghub

Stars : ✭ 2,562 (+7.42%)

Mutual labels: spider , scrapy , dashboard , scrapyd , scrapyd-ui

Gerapy

Distributed Crawler Management Framework Based on Scrapy, Scrapyd, Django and Vue.js

Stars : ✭ 2,601 (+9.06%)

Mutual labels: spider , scrapy , dashboard , scrapyd

Crawlab

Distributed web crawler admin platform for spiders management regardless of languages and frameworks.

Stars : ✭ 8,392 (+251.87%)

Mutual labels: spider , scrapy , scrapyd-ui

scrapy-admin

A django admin site for scrapy

Stars : ✭ 44 (-98.16%)

Mutual labels: spider , scrapy , scrapyd

Alipayspider Scrapy

AlipaySpider on Scrapy (uses Chrome driver); an Alipay crawler based on Scrapy.

Stars : ✭ 70 (-97.06%)

Mutual labels: spider , scrapy

Image Downloader

Download images from Google, Bing, Baidu.

Crawlee vs Scrapy. Focused vs. Broad Crawling

Before getting into the meat of the comparison, let’s take a step back and look at two different use cases for web crawlers: focused crawls and broad crawls.

In a focused crawl you are interested in a specific set of pages (usually a specific domain). For example, you may want to crawl all product pages on amazon.com. In a broad crawl the set of pages you are interested in is either very large or unlimited and spread across many domains. That’s usually what search engines are doing. This isn’t a black-and-white distinction. It’s a continuum. A focused crawl with many domains (or multiple focused crawls performed simultaneously) will essentially approach the properties of a broad crawl.

Now, why is this important? Because focused crawls have a different bottleneck than broad crawls.

When crawling one domain (such as amazon.com), you are essentially limited by your politeness policy. You don’t want to overwhelm the server with thousands of requests per second, or you’ll get blocked. Thus, you need to impose an artificial limit on requests per second. This limit is usually based on server response time. Due to this artificial limit, most of the CPU and network resources of your server will be idle. Having a distributed crawler using thousands of machines will not make a focused crawl go any faster than running it on your laptop.
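To make the idea of a politeness policy concrete, here is a minimal sketch that derives a per-domain delay from the last observed response time. The `PolitenessPolicy` class, the multiplier of 10, and the minimum and maximum delays are all assumptions chosen for illustration, not values taken from any particular crawler.

```python
import time
from urllib.parse import urlparse


class PolitenessPolicy:
    """Tracks, per domain, the earliest time the next request may be sent."""

    def __init__(self, factor=10.0, min_delay=0.5, max_delay=30.0):
        self.factor = factor        # delay = factor * last response time
        self.min_delay = min_delay  # never wait less than this (seconds)
        self.max_delay = max_delay  # never wait more than this (seconds)
        self._next_allowed = {}     # domain -> earliest allowed timestamp

    def delay_for(self, response_seconds):
        # Slower servers get longer pauses, clamped to [min_delay, max_delay].
        return max(self.min_delay, min(self.max_delay, self.factor * response_seconds))

    def record(self, url, response_seconds, now=None):
        # Call after each response to schedule the next request to that domain.
        now = time.monotonic() if now is None else now
        domain = urlparse(url).netloc
        self._next_allowed[domain] = now + self.delay_for(response_seconds)

    def wait_time(self, url, now=None):
        # Seconds the crawler should still wait before requesting this URL.
        now = time.monotonic() if now is None else now
        domain = urlparse(url).netloc
        return max(0.0, self._next_allowed.get(domain, now) - now)


policy = PolitenessPolicy()
policy.record("https://example.com/page-1", response_seconds=0.25, now=100.0)
same_domain_wait = policy.wait_time("https://example.com/page-2", now=101.0)
other_domain_wait = policy.wait_time("https://example.org/", now=101.0)
```

The second domain has no pending delay, which is exactly why a broad crawl can keep a machine busy while a focused crawl leaves it mostly idle.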

Architecture of a Web crawler.

In the case of a broad crawl, the bottleneck is the performance and scalability of the crawler. Because you request pages from many different domains, you can potentially perform millions of requests per second without overwhelming any specific server. You are limited by the number of machines you have, their CPU, network bandwidth, and how well your crawler can make use of these resources.

If all you want is to scrape data from a couple of domains, then looking for a web-scale crawler may be overkill. In this case, take a look at services like import.io (from $299 monthly), which is great at scraping specific data items from web pages.

Scrapy (described below) is also an excellent choice for focused crawls.

Apify sdk. Overview

The Apify SDK is available as the apify NPM package, and it provides the following tools:

Actor - Serves as an alternative approach to the static helpers exported from the package. This class can be used to control the current actor run and to interact with the actor's environment.

ApifyClient - Allows the user to interact with the Apify platform from code, control and schedule actors on the platform, and access the result data stores.

Configuration - Helper class encapsulating the configuration of the current actor run.

PlatformEventManager - Event emitter for the platform and SDK events. Can be used to track actor run performance or serverless container migration.

ProxyConfiguration - Configures connection to a proxy server with the provided options. Setting proxy configuration in your crawlers automatically configures them to use the selected proxies for all connections. The proxy servers are managed by Apify Proxy.

RequestQueue - Represents a queue of URLs to crawl, which is stored either on a local filesystem or in the Apify Cloud. The queue is used for deep crawling of websites, where you start with several URLs and then recursively follow links to other pages. The data structure supports both breadth-first and depth-first crawling orders.

Dataset - Provides a store for structured data and enables their export to formats like JSON, JSONL, CSV, XML, Excel or HTML. The data is stored on a local filesystem or in the Apify Cloud. Datasets are useful for storing and sharing large tabular crawling results, such as a list of products or real estate offers.

KeyValueStore - A simple key-value store for arbitrary data records or files, along with their MIME content type. It is ideal for saving screenshots of web pages or PDFs, or for persisting the state of your crawlers. The data is stored on a local filesystem or in the Apify Cloud.
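The difference between breadth-first and depth-first crawling orders, mentioned for the request queue above, comes down to which end of the queue the next URL is taken from. The sketch below illustrates this with a plain in-memory queue in Python; it is not the Apify SDK, and the `LINKS` graph and `crawl_order` function are hypothetical stand-ins for real pages and a real crawler.

```python
from collections import deque

# Hypothetical link graph standing in for real pages and the links found on them.
LINKS = {
    "/": ["/a", "/b"],
    "/a": ["/a/1", "/a/2"],
    "/b": ["/b/1"],
    "/a/1": [],
    "/a/2": [],
    "/b/1": [],
}


def crawl_order(start, breadth_first=True):
    """Return the order in which pages are visited, starting from `start`."""
    queue = deque([start])
    seen = {start}  # avoid enqueueing the same URL twice
    order = []
    while queue:
        # Breadth-first takes the oldest URL; depth-first takes the newest.
        url = queue.popleft() if breadth_first else queue.pop()
        order.append(url)
        for link in LINKS.get(url, []):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return order


bfs = crawl_order("/", breadth_first=True)
dfs = crawl_order("/", breadth_first=False)
```

Breadth-first visits every page one click away from the start before going deeper, while depth-first follows one branch to its end before backtracking.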

Scrapy example. Introducing Scrapy

A framework is a reusable, “semi-complete” application that can be specialized to produce custom applications. (Source: Johnson & Foote, 1988)

In other words, the Scrapy framework provides a set of Python scripts that contain most of the code required to use Python for web scraping. We need only add the last bit of code required to tell Python what pages to visit, what information to extract from those pages, and what to do with it. Scrapy also comes with a set of scripts to set up a new project and to control the scrapers that we will create.

It also means that Scrapy doesn’t work on its own. It requires a working Python installation and a series of supporting libraries. Scrapy 2.0 and later run on Python 3 only; the older 1.x releases also supported Python 2.7. If you haven’t installed Python or Scrapy on your machine, you can refer to the setup instructions. If you install Scrapy as suggested there, it should take care of installing all required libraries as well.

To check that Scrapy is installed, type

scrapy version

in a shell. If all is good, you should get back a version number such as the following:

Scrapy 2.1.0

If you have a newer version, you should be fine as well.

To introduce the use of Scrapy, we will reuse the same example we used in the previous section. We will start by scraping a list of URLs from the list of faculty of the Psychological & Brain Sciences and then visit those URLs to scrape detailed information about those faculty members.

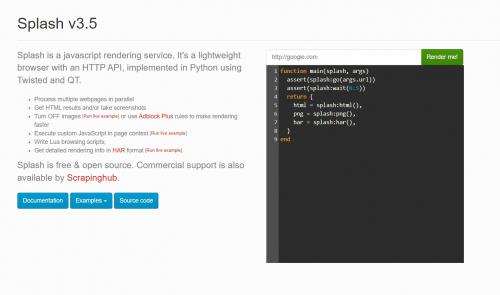

Scrapy-playwright. Scrapy Splash

Like other headless browsers you can tell Scrapy Splash to do certain actions before returning the HTML response to your spider.

Splash can:

- Wait for page elements to load

- Scroll the page

- Click on page elements

- Take screenshots

- Turn off images or use Adblock rules to make rendering faster

It has comprehensive documentation, has been heavily battle-tested for scraping, and runs as a separate service, so you don't need to manage the browsers themselves.

The main drawback of Splash is that it can be a bit harder to get started with as a beginner, as you have to run the Splash Docker image, and you control the browser with Lua scripts. But once you get familiar with Splash, it can cover most scraping tasks.

Scrapy Splash Integration

Getting up and running with Splash isn't quite as straightforward as other options, but it is still simple enough:

1. Download Scrapy Splash

and you should see the following screen.

If you do, then Scrapy Splash is up and running correctly.
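Once Splash is running, it is reached over HTTP: you ask it to load a page, and it returns the rendered HTML. The sketch below only builds such a request URL for Splash's `render.html` endpoint using the standard library. It assumes Splash is listening on `localhost:8050`, its usual default; the `splash_render_url` helper and the example page URL are hypothetical.

```python
from urllib.parse import urlencode

# Assumption: Splash runs locally on its usual default port.
SPLASH_URL = "http://localhost:8050"


def splash_render_url(page_url, wait=2.0):
    """Build a render.html URL asking Splash to load `page_url`.

    `wait` is the number of seconds Splash waits after the page loads,
    which gives JavaScript time to fill in the content.
    """
    query = urlencode({"url": page_url, "wait": wait})
    return f"{SPLASH_URL}/render.html?{query}"


example = splash_render_url("https://example.com/products?page=1")
```

Fetching that URL with any HTTP client should return the page's HTML after rendering. In a Scrapy project, the scrapy-splash plugin takes care of building these requests for you.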

Scrapy Alternatives for Web Scraping & Crawling

No doubt, Scrapy is a force to be reckoned with in the Python developer community when it comes to building scalable web scrapers and crawlers. However, it is still not the best tool for everyone.

If you are looking for an alternative to the Scrapy framework, this section is for you: below we describe some of the top Scrapy alternatives you can use.

1. Requests + BeautifulSoup — Best Beginner Libraries for Web Scraping

The best alternative to the Scrapy web crawling framework for web scraping is not one tool but a combination of libraries. Web scraping entails sending web requests to download web pages and then parsing the document to extract the data points of interest. The Requests library handles HTTP requests and lets you do so more easily and in fewer lines of code than the urllib.request module in the Python standard library. It also handles exceptions better, which makes it easier to use and debug.

BeautifulSoup, on the other hand, is meant for extracting data from the pages you download using Requests. It is not itself a parser, as many assume. Instead, it depends on a parsing library such as html.parser, lxml, or html5lib to build the document tree, which it then traverses to locate the data points of interest. The duo of Requests and BeautifulSoup is the most popular choice for web scraping and is used in most beginner tutorials.
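To show what the parsing step involves, here is a minimal sketch that uses only html.parser from the Python standard library, one of the parsers BeautifulSoup can delegate to. It is not BeautifulSoup itself, which wraps this kind of low-level work in a friendlier API. The `LinkExtractor` class and the sample HTML are hypothetical, and the page is an inline string rather than a download, so no network access is needed.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects the href attribute of every <a> tag it encounters."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        # attrs arrives as a list of (name, value) pairs.
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


# Hypothetical page content; in practice this would be the body of a response.
HTML = """<html><body>
<a href="/faculty/1">Dr. A</a>
<a href="/faculty/2">Dr. B</a>
<a>no link here</a>
</body></html>"""

parser = LinkExtractor()
parser.feed(HTML)
links = parser.links
```

With BeautifulSoup, the same extraction is typically a one-liner over `soup.find_all("a")`, which is a large part of why the library is popular with beginners.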

2. Selenium — Best for All Programming Languages

Selenium is also one of the best alternatives to Scrapy. To be honest, Selenium isn’t what you will want to use for all of your web scraping projects, as it is slow compared to most other tools described in this article. However, its advantage over Scrapy is that it renders JavaScript, which Scrapy cannot do on its own. It does this by automating web browsers and then using its API to access and interact with content on the web page. The browsers it automates include Chrome, Firefox, Edge, and Safari. Older versions also supported PhantomJS, which is now deprecated.

Selenium has what it calls headless mode. In headless mode, browsers are not launched visibly; they run in the background, and you wouldn’t know a browser has been launched. The headed (visible) mode should be used only for debugging, as it slows the system down further. Selenium is also free and has the advantage of being usable from popular programming languages such as Python, NodeJS, and Java, among others.

3. Puppeteer — Best Scrapy Alternative for NodeJS

Puppeteer is a Node library that provides a high-level API to control Chrome or Chromium over the DevTools protocol. Scrapy is meant only for Python programming. If you need to develop a NodeJS-based script or application, the Puppeteer library is the best option for you. Unlike Scrapy, Puppeteer renders JavaScript, putting it in the same class as Selenium. Compared to Selenium, it has the advantage of being faster and easier to debug; the trade-off is that it is meant only for the NodeJS platform.

The Puppeteer library runs Chrome in headless mode by default; you will need to configure it if you need the headed mode for debugging. Some of the things you can do with Puppeteer include taking screenshots and converting pages to PDF files. You can also test Chrome extensions using this library. For the sake of compatibility, Puppeteer downloads a matching version of the browser by default. If you do not want this, use the puppeteer-core package instead.