Which Are The Best Web Scraper Options to Replace.. What Defines the Best Tools for Web Scraping?

- Which Are The Best Web Scraper Options to Replace.. What Defines the Best Tools for Web Scraping?

- Web Scraping api. Top 10 Best Web Scraping APIs & Alternatives (2021)

- How to Parse website python. Scrape and Parse Text From Websites

- Data Scraping. Scraping Data

- Simple Scraper chrome extension. What Is Parsing?

- Bot to extract Data From website. Web Data Scraper

- Site parser. By Framework Used

- Best website Scraper. 10 Best Web Scraping Tools for Digital Marketers

Which Are The Best Web Scraper Options to Replace.. What Defines the Best Tools for Web Scraping?

If you want to see our thoughts about these products at a glance, check out the rating scale we include with each heading. This 1-10 scale takes the following things into consideration:

Cost

It’s no secret that this is one of the first (if not the first) factors people want to know about when choosing a product, and finding the best web scraper service is no different. However, since not all web scrapers are created equal, giving the best cost ratings to the cheapest products doesn’t really tell the whole story. So it’s important to consider the other factors on this list, too.

Ease of use

Ding, ding, ding, now this is what I’m talking about. If you’re like me (which, since you’re reading this article, I assume that you are), you don’t want to spend hella time figuring out how to use a tool. Especially when you’re not fully convinced about the importance of a data scraping software anyway. (Yet.)

Scraping Robot believes that scraping should be available to everyone, not just coding experts and developers. That’s why “Ease of use” is one of the first things I considered with each product in this article. Does the company offer tutorials? If so, what’s the content and quality of those tutorials and instructions? When you’re just getting started with web scraping, it can be hard to understand how the scraper works and what input it needs from you to do its job. If the site doesn’t use tutorials, that’s a big indicator that you’re probably going to have to put a lot of time and effort into just learning the product, and I don’t want to do that. So, no tutorials (or bad tutorials) = no points for this category. Sorry not sorry.

Javascript rendering

If your scraper can “render JavaScript,” as they say, then your pool of websites just got a whole lot bigger (and more useful!). That’s because you can scrape dynamic sites, or sites that are programmed to change based on input from their users (e.g. Google Maps). A lot of scrapers don’t render JavaScript because it’s so much more expensive to run on the hardware, and it can take forever to load and scrape multiple pages at a time with JavaScript. But most modern websites use JavaScript in at least some form, so the ability to render that kind of code really increases the quality and quantity of data you can access with your scraper.
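To see why rendering matters, here is a minimal Python sketch using only the standard library. Both HTML snippets are made up for illustration: one is a server-rendered page, the other is the kind of empty shell a JavaScript app might send before its scripts run. A scraper that does not execute JavaScript only sees what `visible_text` returns.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects human-visible text, skipping <script> and <style> bodies."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-empty text that is outside script/style blocks.
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def visible_text(html):
    """Return the text a non-rendering scraper could read from `html`."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


# Hypothetical pages, invented for this example.
STATIC_PAGE = "<html><body><h1>Prices</h1><p>Widget: $5</p></body></html>"
JS_SHELL = (
    '<html><body><div id="app"></div>'
    "<script>document.getElementById('app').innerText = 'Widget: $5';</script>"
    "</body></html>"
)

print(visible_text(STATIC_PAGE))     # Prices Widget: $5
print(repr(visible_text(JS_SHELL)))  # ''
```

The second page carries the same information, but only a scraper that actually runs the script would ever see it.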

Desktop software

Some tools allow you to scrape the web using browser extensions or simple modules, but many scrapers also offer a desktop app option. Depending on the type of scraping you want to do, both tools are super helpful. However, if you’re going to use a desktop scraper, you want to make sure it supports a robust proxy management system. In layman’s terms, that means your scraper can handle high volumes of proxy requests without slowing down or getting banned.
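As a rough illustration of what proxy management involves, here is a minimal Python sketch of round-robin proxy rotation using only the standard library. The proxy addresses and the `ProxyRotator` class are placeholders invented for this example, and a real setup would also need health checks and retries.

```python
import itertools
import urllib.request

# Placeholder proxy addresses (assumptions); replace with proxies you control.
PROXIES = [
    "http://10.0.0.1:8080",
    "http://10.0.0.2:8080",
    "http://10.0.0.3:8080",
]


class ProxyRotator:
    """Hands out proxies in round-robin order."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._cycle = itertools.cycle(proxies)

    def next_proxy(self):
        return next(self._cycle)

    def build_opener(self):
        """Return (proxy, opener); the opener routes requests through proxy."""
        proxy = self.next_proxy()
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return proxy, urllib.request.build_opener(handler)


rotator = ProxyRotator(PROXIES)
for _ in range(4):
    proxy, opener = rotator.build_opener()
    # opener.open(url, timeout=10) would send the request through `proxy`.
    print(proxy)
```

Each request gets the next proxy in the list, so no single IP carries all the traffic.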

Web Scraping api. Top 10 Best Web Scraping APIs & Alternatives (2021)

A web scraping API is software that allows users and developers to scrape information from websites without being detected. The APIs implement Captcha avoidance and IP rotation strategies to execute the users' search requests.

What is the best Web Scraping API?

After reviewing all the Web Scraping APIs, we found these 10 APIs to be the very best and worth mentioning:

- ScrapingBee API

- Scrapper’s Proxy API

- ScrapingAnt API

- ScrapingMonkey API

- AI Web Scraper API

- Site Scraper API

- ScrapeGoat API

- Scrappet API

- Scraper – Crawler – Extract API

- Scraper Box API

Web Scraping

| API | Best for | Link |
| --- | --- | --- |
| ScrapingBee | Rotating proxies | Connect to API |
| Scrapper’s Proxy | Proxies for faster speeds and higher success rates | Connect to API |
| ScrapingAnt | Customizing browser settings | Connect to API |
| ScrapingMonkey | | Connect to API |
| AI Web Scraper | Intelligent web page extraction using AI algorithms | Connect to API |
| Site Scraper | Fetching site titles | Connect to API |
| ScrapeGoat | Web page screenshots and SPA applications pre-rendering | Connect to API |
| Scrappet | Web page data extraction using URLs | Connect to API |
| Scraper – Crawler – Extract | Associated website links and browsing URLs | Connect to API |
| Scraper Box | Data extraction without blockades | Connect to API |

Our Top Picks for Best Web Scraping APIs

1. ScrapingBee

ScrapingBee fetches the URLs of the specific websites from which data is to be scraped.

This API aims to make data extraction seamless by handling the challenges that commonly arise during the process. It helps resolve CAPTCHAs and supports headless Chrome browsers and custom cookies.

The API also supports JavaScript rendering, allowing users to scrape sites built with Vue.js, AngularJS, and React. This feature also lets users execute JavaScript snippets with a custom wait. Once requests are received and processed, the API returns the data in HTML-supported formats. Among the key benefits of this API is its support for rotating proxies, which helps users avoid hitting website rate limits. The rotating proxies come with a large proxy pool and geotargeting.

The users can benefit from the documentation provided to understand the workings of the API quickly.

How to Parse website python. Scrape and Parse Text From Websites

Collecting data from websites using an automated process is known as web scraping. Some websites explicitly forbid users from scraping their data with automated tools like the ones that you’ll create in this tutorial. Websites do this for two possible reasons:

- The site has a good reason to protect its data. For instance, Google Maps doesn’t let you request too many results too quickly.

- Making many repeated requests to a website’s server may use up bandwidth, slowing down the website for other users and potentially overloading the server such that the website stops responding entirely.
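The second concern can be reduced on the scraper's side by spacing requests out. Here is a minimal sketch, assuming a fixed minimum delay between requests is acceptable to the site. The `Throttle` class and the one-second value are illustrative assumptions, not a recommendation from any particular website.

```python
import time


class Throttle:
    """Blocks so that consecutive calls are at least `min_interval` seconds apart."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock    # injectable so the class can be tested without waiting
        self._sleep = sleep
        self._last = None      # time of the previous request, if any

    def wait(self):
        """Sleep if needed, record the request time, and return the delay used."""
        now = self._clock()
        delay = 0.0
        if self._last is not None:
            delay = max(0.0, self.min_interval - (now - self._last))
        if delay:
            self._sleep(delay)
        self._last = now + delay
        return delay


throttle = Throttle(min_interval=1.0)
# Call throttle.wait() immediately before each request to the same host.
```

Calling `throttle.wait()` before every request keeps the scraper from sending bursts that could slow the site down for other users.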

Before using your Python skills for web scraping, you should always check your target website’s acceptable use policy to see if accessing the website with automated tools is a violation of its terms of use. Legally, web scraping against the wishes of a website is very much a gray area.

Important: Please be aware that the following techniques may be illegal when used on websites that prohibit web scraping.

For this tutorial, you’ll use a page that’s hosted on Real Python’s server. The page that you’ll access has been set up for use with this tutorial.

Now that you’ve read the disclaimer, you can get to the fun stuff. In the next section, you’ll start grabbing all the HTML code from a single web page.

Build Your First Web Scraper

One useful package for web scraping that you can find in Python’s standard library is urllib, which contains tools for working with URLs. In particular, the urllib.request module contains a function called urlopen() that you can use to open a URL within a program.

In IDLE’s interactive window, type the following to import urlopen():

The web page that you’ll open is at the following URL:

To open the web page, pass url to urlopen():

urlopen() returns an HTTPResponse object:

To extract the HTML from the page, first use the HTTPResponse object’s .read() method, which returns a sequence of bytes. Then use .decode() to decode the bytes to a string using:

Now you can print the HTML to see the contents of the web page:

The output that you’re seeing is the HTML code of the website, which your browser renders when you visit http://olympus.realpython.org/profiles/aphrodite:

With urllib, you accessed the website similarly to how you would in your browser. However, instead of rendering the content visually, you grabbed the source code as text. Now that you have the HTML as text, you can extract information from it in a couple of different ways.
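The steps above can be collected into one small function. This is a sketch rather than the tutorial's exact listing: the function name `fetch_html` and the UTF-8 default are assumptions, and the URL in the comment is the profile page mentioned above.

```python
from urllib.request import urlopen


def fetch_html(url, encoding="utf-8"):
    """Open `url`, read the raw bytes, and decode them to a string."""
    with urlopen(url) as page:  # an HTTPResponse object for http:// URLs
        html_bytes = page.read()
    return html_bytes.decode(encoding)


# Requires network access, so it is left commented out here:
# html = fetch_html("http://olympus.realpython.org/profiles/aphrodite")
# print(html)
```

If a page declares a different character encoding, pass it explicitly through the `encoding` argument.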

Data Scraping. Scraping Data

Rapid growth of the World Wide Web has significantly changed the way we share, collect, and publish data. A vast amount of information is being stored online, in both structured and unstructured forms. For certain questions or research topics, this has resulted in a new problem: the concern is no longer data scarcity and inaccessibility but, rather, overcoming the tangled masses of online data.

Collecting data from the web is not an easy process, as there are many technologies used to distribute web content. Therefore, dealing with more advanced web scraping requires familiarity with accessing data stored in these technologies via R. Through this section I will provide an introduction to some of the fundamental tools required to perform basic web scraping. This includes importing spreadsheet data files stored online, scraping HTML text, scraping HTML table data, and leveraging APIs to scrape data.

My purpose in the following sections is to discuss these topics at a level meant to get you started in web scraping; however, this area is vast and complex, and this chapter is far from providing expert-level insight. To advance your knowledge I highly recommend getting copies of and

Note: the examples provided below were performed in 2015. Consequently, if you apply the code provided throughout these examples, your outputs may differ due to webpages and their content changing over time.

Importing Spreadsheet Data Files Stored Online

The most basic form of getting data from online is to import tabular (i.e. .txt, .csv) or Excel files that are being hosted online. This is often not considered web scraping; however, I think it’s a good place to start introducing the user to interacting with the web for obtaining data. Importing tabular data is especially common for the many types of government data available online. A quick perusal of Data.gov illustrates over 190,000 examples. In fact, we can provide our first example of importing online tabular data by downloading the Data.gov .csv file that lists all the federal agencies that supply data to Data.gov.

# the url for the online CSV url

Downloading Excel spreadsheets hosted online can be performed just as easily. Recall that there is not a base R function for importing Excel data; however, several packages exist to handle this capability. One package that works smoothly with pulling Excel data from urls is gdata. With gdata we can use read.xls() to download this Excel file from the given url.

Note that many of the arguments covered earlier (i.e. specifying sheets to read from, skipping lines) also apply to read.xls(). In addition, gdata provides some useful functions (sheetCount() and sheetNames()) for identifying if multiple sheets exist prior to downloading. Check out the gdata help documentation for more.

Special note when using gdata on Windows: When downloading excel spreadsheets from the internet, Mac users will be able to install the

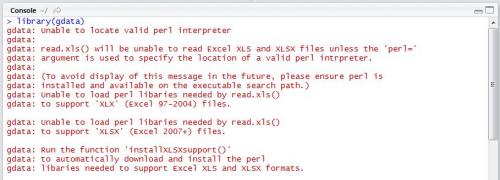

If you are a Windows user and attempt to attach the gdata library immediately after installation, you will likely receive the warning message given in Figure 1. You will not be able to download Excel spreadsheets from the internet without installing some additional software.

gdata without Perl

Unfortunately, it’s not as straightforward to fix as the error message would indicate. Running installXLSXsupport() won’t completely solve your problem without Perl software installed. In order for gdata to function properly, you must install ActiveState Perl using the following link: http://www.activestate.com/activeperl/. The download could take up to 10 minutes or so, and when finished, you will need to find where the software was stored on your machine (likely directly on the C:/ Drive).

Once Perl software has been installed, you will need to direct R to find it each time you call the function.

Another common form of file storage is using zip files. For instance, the Bureau of Labor Statistics (BLS) stores some of its data in .zip files. We can use download.file() to download the file to your working directory and then work with this data as desired.

Simple Scraper chrome extension. What Is Parsing?

Parsing is a set of technologies and techniques for collecting publicly available data and storing it in a structured format. The data can take many forms, such as text, links, the contents of table cells, and so on.

Parsing is most often used to monitor market prices, competitors' offers, and events in news feeds, as well as to build databases of potential customers.

The choice of tool depends on many factors, but above all on the volume of information to be collected and on how hard it is to get past protective mechanisms. But is it always possible or necessary to bring in specialists? Do sites always have protection against parsing? Perhaps in some cases you can manage on your own?

In that case, what could be handier than the familiar Google Chrome?

Browser extensions are a good tool when you need to collect a relatively small data set. They are also a practical way to test the difficulty, accessibility, and feasibility of collecting the data yourself. All you need to do is download an extension you like and choose a format for storing the data. As a rule, this is CSV (comma-separated values: a text file in which fields are separated by a chosen delimiter, usually a comma, hence the name) or familiar Excel spreadsheets.

Below is a comparison of the ten most popular Chrome extensions.

Looking ahead:

- all paid extensions offer some kind of free trial period;

- only three (Instant Data Scraper, Spider, and Scraper) are completely free;

- all paid tools (except Data Miner) have an API (Application Program Interface: a programming interface that lets them work together with other programs).

Bot to extract Data From website. Web Data Scraper

Bypassing the Limits: solve captchas, use a proxy to change IP, save login information.

If the intended site has a security code (Captcha), the bot can solve the captcha like a human (although solving the captcha is not free and costs a little).

If the intended site limits the amount of information available to each account, the bot can log in with different accounts and automatically switch between them to increase the amount of extracted information.

If the intended site limits the amount of information available to each IP, the bot can use the proxies you provide and automatically change your system's IP.

7-Day Money-Back Guarantee: refund in case of non-performance.

Easy to use

Web Data Scraper Bot Package

The Web Data Scraper Bot Package, part of the Virtual User Software, contains several bots, each of which can extract information from a specific website.

I should mention that our software can solve Captcha codes; it can change the IP of your system using proxy servers, and it has lots of other features that can be useful for data scraping.

In this video, as you can see, the bot is downloading images from the Unsplash website, based on their categories and titles. In the end, the extracted information will be saved in an Excel file.

If there are files to be downloaded, they will be saved in specific folders.

The target websites for which the bots have been developed are listed on our website.

Important Questions

How fast is the Web Data Scraper bot?

Thanks to its optimized algorithms, our bot performs its actions very quickly, but overall speed also depends on other factors: the speed of the system, the speed of the internet connection, the load time of the web pages, and, most importantly, the limitations of the target website. For example, imagine a website that limits the amount of data that can be extracted in a single session. To overcome this limitation, our bot has to close the browser (clearing its cache) and open it again to extract all the required data, which slows down the whole process. Limitations of this kind play the main role in determining the speed of the operation. All things considered, the bot is very fast at extracting data from different websites.

Which is the most popular bot in this package?

The Google Map Scraper Bot is one of the most useful and popular bots in the Web Data Scraper Bot Package.

What information does the Google Map Scraper Bot provide?

This bot searches Google Maps for your intended keyword and automatically extracts information such as the address, phone number, and website address of the places registered in Google Maps for that keyword.

Site parser. By Framework Used

If your data collection tasks are non-standard, you need to build a suitable architecture and work with many threads, and existing solutions do not suit you, then you need to write your own parser. This requires resources, programmers, servers, and special tooling that makes it easier to write and integrate the parsing program, and of course support (regular maintenance will be needed: if the data source changes, the code has to change too). Let's look at which libraries currently exist. In this section we will not assess the strengths and weaknesses of the solutions, since the choice may be driven by the characteristics of your current software and other features of your environment: what is a strength for some will be a weakness for others.

Parsing websites with Python

Python libraries for parsing websites make it possible to build fast, efficient programs that can later be integrated via API. An important point is that the frameworks listed below are open source.

- The most widely used framework, with a large community and detailed documentation; well structured. License: BSD

- Designed for parsing HTML and XML documents, with documentation in Russian; it is fast and detects encodings automatically. License: Creative Commons, Attribution-ShareAlike 2.0 Generic (CC BY-SA 2.0)

- Powerful and fast, supports JavaScript, no built-in proxy support. License: Apache License, Version 2.0

- Its distinguishing feature is that it is asynchronous, which lets you write parsers with a large number of network threads; it has documentation in Russian and works via API. License: MIT License

- A simple library that is fast at parsing large documents; it works with XML and HTML documents, converts the source information into Python data types, and is well documented. It is compatible with BeautifulSoup, in which case the latter uses Lxml as its parser. License: BSD

- A browser automation toolkit that includes a number of libraries for deploying and managing browsers, plus the ability to record and replay user actions. It lets you write scripts in various languages: Java, C#, JavaScript, Ruby. License: Apache License, Version 2.0

JavaScript also offers ready-made frameworks for building parsers with convenient APIs.

- Puppeteer is a headless Chrome API for NodeJS programmers who want fine-grained control over their work when scraping. As an open-source tool, Puppeteer is free to use. It is actively developed and maintained by the Google Chrome team itself. It has a well-designed API and automatically installs a compatible Chromium binary during installation, which means you do not have to track browser versions yourself. Although it is much more than a library for parsing websites, it is very often used to scrape data that requires JavaScript to display; it processes scripts, stylesheets, and fonts like a real browser. Note that although it is an excellent solution for sites that need JavaScript to display data, this tool requires significant CPU and memory resources. License: Apache License, Version 2.0

- Fast; it parses page markup and offers functions for processing the resulting data. It works with HTML and has an API modeled on the jQuery API. License: MIT License

- A Node.js library that lets you work with JSON, JSONL, CSV, XML, XLSX or HTML, CSS. Works with proxies. License: Apache License, Version 2.0

- Written in Node.js; it finds and loads AJAX content, supports CSS 3.0 and XPath 1.0 selectors, logs URLs, and fills in forms. License: MIT License

Java also offers various libraries that can be used for parsing websites.

- A library that offers a lightweight headless browser (without a graphical interface) for parsing and automation. It lets you interact with REST APIs or web applications (JSON, HTML, XHTML, XML). It fills in forms, downloads files, works with tabular data, and supports Regex. License: Apache License (the software expires monthly, after which the latest version must be downloaded)

- A library for working with HTML that provides a convenient API for fetching URLs and for extracting and processing data using HTML5 DOM methods and CSS selectors. Supports proxies. Does not support XPath. License: MIT License

- Not a general-purpose unit testing framework; it is a browser without a graphical interface. It models HTML pages and provides an API that lets you invoke pages, fill in forms, and click links. Supports JavaScript and XPath-based parsing. License: Apache License, Version 2.0

- A simple parser that lets you analyze HTML documents and process them with XPath.

Best website Scraper. 10 Best Web Scraping Tools for Digital Marketers

Data extraction and structurization is a commonly used process for marketers. However, it also requires a great amount of time and effort, and after a few days, the data can change, and all that amount of work will be irrelevant. That’s where web scraping tools come into play.

If you start googling web scraping tools, you will find hundreds of solutions: free and paid options, API and visual web scraping tools, desktop and cloud-based options; for SEO, price scraping, and many more. Such variety can be quite confusing.

We made this guide for the best web scraping tools to help you find what fits your needs best so that you can easily scrape information from any website for your marketing needs.


What Does a Web Scraper Do?

A web scraping tool is software that simplifies the process of data extraction from websites or advertising campaigns. Web scrapers use bots to extract structured data and content: first, they extract the underlying HTML code, and then they store the data in a structured form such as a CSV file, an Excel spreadsheet, an SQL database, or other formats.
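The two-step flow described above (pull the HTML, then store structured rows) can be sketched in Python with the standard library alone. The HTML snippet, the `product` and `price` class names, and the column names are invented for illustration; real pages need selectors matched to their actual markup.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical markup standing in for a downloaded page.
SAMPLE_HTML = """
<ul>
  <li><span class="product">Phone A</span><span class="price">199</span></li>
  <li><span class="product">Phone B</span><span class="price">249</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Builds one dict per <li>, keyed by the class of each <span>."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._field = None  # class of the <span> currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "li":
            self.rows.append({})
        elif tag == "span":
            self._field = dict(attrs).get("class")

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None

    def handle_data(self, data):
        if self._field and self.rows:
            self.rows[-1][self._field] = data.strip()


def html_to_csv(html):
    """Extract product rows from `html` and return them as CSV text."""
    parser = ProductParser()
    parser.feed(html)
    out = io.StringIO()
    writer = csv.DictWriter(
        out,
        fieldnames=["product", "price"],
        extrasaction="ignore",  # skip spans with classes we did not ask for
        lineterminator="\n",
    )
    writer.writeheader()
    writer.writerows(parser.rows)
    return out.getvalue()


print(html_to_csv(SAMPLE_HTML))
```

Writing to a file instead of a string only requires swapping `io.StringIO()` for `open("products.csv", "w", newline="")`, where the file name is again just an example.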

You can use web scraping tools in many ways; for example:

- Perform keyword and PPC research.

- Analyze your competitors for SEO purposes.

- Collect competitors’ prices and special offers.

- Crawl social trends (mentions and hashtags).

- Extract emails from online business directories, for example, Yelp.

- Collect companies’ information.

- Scrape retailer websites for the best prices and discounts.

- Scrape job postings.

There are dozens of other ways of implementing web scraping features, but let’s focus on how marketers can profit from automated data collection.

Web Scraping for Marketers

Web scraping can supercharge your marketing tactics in many ways, from finding leads to analyzing how people react to your brand on social media. Here are some ideas on how you can use these tools.

Web scraping for lead generation

If you need to extend your lead portfolio, you may want to contact people who fit your customer profile. For example, if you sell software for real estate agents, you need those agents’ email addresses and phone numbers. Of course, you can browse websites and collect their details manually, or you can save time and scrape them with a tool.

A web scraper can automatically collect the information you need: name, phone number, website, email, location, city, zip code, etc. We recommend starting scraping with Yelp and Yellowpages.

Now, you can build your email and phone lists to contact your prospects.

Web scraping for market research

With web scraping tools, you can scrape valuable data about your industry or market. For example, you can scrape data from marketplaces such as Amazon and collect valuable information, including product and delivery details, pricing, review scores, and more.

Using this data, you can generate insights into positioning and advertising your products effectively.

For example, if you sell smartphones, scrape data from a smartphone reseller catalog to develop your pricing, shipment conditions, etc. Additionally, by analyzing consumers’ reviews, you can understand how to position your products and your business in general.

Web scraping for competitor research

You may browse through your competitors’ websites and gather information manually, but what if there are dozens of them that each have hundreds or thousands of web pages? Web scraping will save you a lot of time, and with regular scraping, you will always be up-to-date.

You can regularly scrape entire websites, including product catalogs, pricing, reviews, blog posts, and more, to make sure you are riding the wave.

Web scraping can be incredibly useful for PPC marketers to get an insight into competitors’ advertising activities. You can scrape competitors’ Search, Image, Display, and HTML ads. You’ll get all of the URLs, headlines, texts, images, country, popularity, and more in just a few minutes.

Web scraping for knowing your audience

Knowing what your audience thinks and what they talk about is priceless. That’s how you can understand their issues, values, and desires to create new ideas and develop existing products.

Web scraping tools can help here too. For example, you can scrape trending topics, hashtags, location, and personal profiles of your followers to get more information about your ideal customer personas, including their interests and what they care and talk about. You may also create a profile network to market to specific audience segments.

Web scraping for SEO

Web scraping is widely used for SEO purposes. Here are some ideas about what you can do:

- Analyze robots.txt and sitemap.xml.