Best 10 free and Paid Web Scraping Tools. 2022 Top 10 Best Web Scraping Tools for Data Extraction | Web Scraping Tool | ScrapeStorm

- Best 10 free and Paid Web Scraping Tools. 2022 Top 10 Best Web Scraping Tools for Data Extraction | Web Scraping Tool | ScrapeStorm

- How to scrape Data from any website for free. 16 Tools to extract Data from website

- Best Web scraper chrome extension. Что такое парсинг?

- Scrapy. Introducing Scrapy

- Easy Web Scraping Tools. The Essential Guide To Web Scraping Tools

- Web Scraping ai Tools. or how to become a Web Scraping ninja

- Best Web Scraping Tools. Apify

Best 10 free and Paid Web Scraping Tools. 2022 Top 10 Best Web Scraping Tools for Data Extraction | Web Scraping Tool | ScrapeStorm

14594 views

Abstract: This article will introduce the top10 best web scraping tools in 2019. They are ScrapeStorm, ScrapingHub, Import.io, Dexi.io, Diffbot, Mozenda, Parsehub, Webhose.io, Webharvy, Outwit. ScrapeStorm Free Download

Web scraping tools are designed to grab the information needed on the website. Such tools can save a lot of time for data extraction.

Here is a list of 10 recommended tools with better functionality and effectiveness.

1. ScrapeStorm

ScrapeStorm is an AI-Powered visual web scraping tool,which can be used to extract data from almost any websites without writing any code.

It is powerful and very easy to use. You only need to enter the URLs, it can intelligently identify the content and next page button, no complicated configuration, one-click scraping.

ScrapeStorm is a desktop app available for Windows, Mac, and Linux users. You can download the results in various formats including Excel, HTML, Txt and CSV. Moreover, you can export data to databases and websites.

Features:

1) Intelligent identification

2) IP Rotation and Verification Code Identification

3) Data Processing and Deduplication

4) File Download

5) Scheduled task

6) Automatic Export

8) Automatic Identification of E-commerce SKU and big images

Pros:

1) Easy to use

2) Fair price

3) Visual point and click operation

4) All systems supported

Cons:

No cloud services

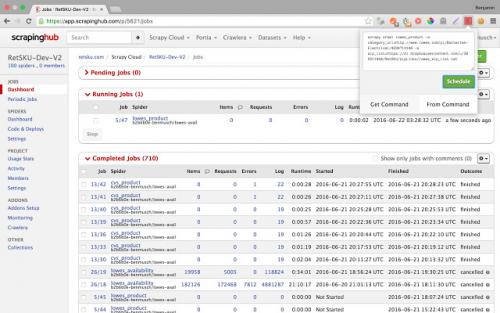

2.ScrapingHub

Scrapinghub is the developer-focused web scraping platform to offer several useful services to extract structured information from the Internet.

Scrapinghub has four major tools – Scrapy Cloud, Portia, Crawlera, and Splash.

Features:

1) Allows you to converts the entire web page into organized content

2) JS on-page support toggle

3) Handling Captchas

Pros:

1) Offer a collection of IP addresses covered more than 50 countries which is a solution for IP ban problems

2) The temporal charts were very useful

3) Handling login forms

4) The free plan retains extracted data in cloud for 7 days

Cons:

1) No Refunds

2) Not easy to use and needs to add many extensive add-ons

3) Can not process heavy sets of data

3.Import.io

Import.io is a platform which facilitates the conversion of semi-structured information in web pages into structured data, which can be used for anything from driving business decisions to integration with apps and other platforms.

They offer real-time data retrieval through their JSON REST-based and streaming APIs, and integration with many common programming languages and data analysis tools.

Features:

1) Point-and-click training

2) Automate web interaction and workflows

3) Easy Schedule data extraction

Pros:

1) Support almost every system

2) Nice clean interface and simple dashboard

3) No coding required

Cons:

1) Overpriced

2) Each sub-page costs credit

4.Dexi.io

Web Scraping & intelligent automation tool for professionals. Dexi.io is the most developed web scraping tool which enables businesses to extract and transform data from any web source through with leading automation and intelligent mining technology.

Dexi.io allows you to scrape or interact with data from any website with human precision. Advanced feature and APIs helps you transform and combine data into powerfull datasets or solutions.

Features:

1) Provide several integrations out of the box

2) Automatically de-duplicate data before sending it to your own systems.

How to scrape Data from any website for free. 16 Tools to extract Data from website

In today's business world, smart data-driven decisions are the number one priority. For this reason, companies track, monitor, and record information 24/7. The good news is there is plenty of public data on servers that can help businesses stay competitive.

The process of extracting data from web pages manually can be tiring, time-consuming, error-prone, and sometimes even impossible. That is why most web data analysis efforts use automated tools.

Web scraping is an automated method of collecting data from web pages. Data is extracted from web pages using software called web scrapers, which are basically web bots.

What is data extraction, and how does it work?

Data extraction or web scraping pursues a task to extract information from a source, process, and filter it to be later used for strategy building and decision-making. It may be part of digital marketing efforts, data science, and data analytics. The extracted data goes through the ETL process (extract, transform, load) and is then used for business intelligence (BI). This field is complicated, multi-layered, and informative. Everything starts with web scraping and the tactics on how it is extracted effectively.

Before automation tools, data extraction was performed at the code level, but it was not practical for day-to-day data scraping. Today, there are no-code or low-code robust data extraction tools that make the whole process significantly easier.

What are the use cases for data extraction?

To help data extraction meet business objectives, the extracted data needs to be used for a given purpose. The common use cases for web scraping may include but are not limited to:

- Online price monitoring: to dynamically change pricing and stay competitive.

- Real estate: data for building real-estate listings.

- News aggregation: as an alternative data for finance/hedge funds.

- Social media: scraping to get insights and metrics for social media strategy.

- Review aggregation: scraping gathers reviews from predefined brand and reputation management sources.

- Lead generation: the list of target websites is scraped to collect contact information.

- Search engine results: to support SEO strategy and monitor SERP.

Is it legal to extract data from websites?

Web scraping has become the primary method for typical data collection, but is it legal to use the data? There is no definite answer and strict regulation, but data extraction may be considered illegal if you use non-public information. Every tip described below targets publicly available data which is legal to extract. However, it is still illegal is to use the scrapped data for commercial purposes.

How to extract data from a website

Manually extracting data from a website (copy/pasting information to a spreadsheet) is time-consuming and difficult when dealing with big data. If the company has in-house developers, it is possible to build a web scraping pipeline. There are several ways of manual web scraping.

1. Code a web scraper with Python

It is possible to quickly build software with any general-purpose programming language like Java, JavaScript, PHP, C, C#, and so on. Nevertheless, Python is the top choice because of its simplicity and availability of libraries for developing a web scraper.

2. Use a data service

Data service is a professional web service providing research and data extraction according to business requirements. Similar services may be a good option if there is a budget for data extraction.

3. Use Excel for data extraction

This method may surprise you, but Microsoft Excel software can be a useful tool for data manipulation. With web scraping, you can easily get information saved in an excel sheet. The only problem is that this method can be used for extracting tables only.

4. Web scraping tools

Modern data extraction tools are the top robust no-code/low code solutions to support business processes. With three types of data extraction tools – batch processing, open-source, and cloud-based tools – you can create a cycle of web scraping and data analysis. So, let's review the best tools available on the market.

Best Web scraper chrome extension. Что такое парсинг?

Парсинг — набор технологий и приемов для сбора общедоступных данных и хранения их в структурированном формате. Данные могут быть представлены множеством способов, таких как: текст, ссылки, содержимое ячеек в таблицах и так далее.

Чаще всего парсинг используется для мониторинга рыночных цен, предложений конкурентов, событий в новостных лентах, а также для составления базы данных потенциальных клиентов.

Выбор инструмента будет зависеть от множества факторов, но в первую очередь от объема добываемой информации и сложности противодействия защитным механизмам. Но всегда ли есть возможность или необходимость в привлечении специалистов? Всегда ли на сайтах встречается защита от парсинга? Может быть в каких-то случаях можно справиться самостоятельно?

Тогда что может быть сподручнее, чем всем привычный Google Chrome? !

Расширения для браузера — это хороший инструмент, если требуется собрать относительно небольшой набор данных. К тому же это рабочий способ протестировать сложность, доступность и осуществимость сбора нужных данных самостоятельно. Всё что потребуется — скачать понравившееся расширение и выбрать формат для накопления данных. Как правило это CSV (comma separated values — текстовый файл, где однотипные фрагменты разделены выбранным символом-разделителем, обычно запятой, отсюда и название) или привычные таблички Excel.

Ниже представлено сравнение десяти самых популярных расширений для Chrome.

Забегая вперед:

- все платные расширения имеют некоторый бесплатный период для ознакомления;

- только три — Instant Data Scraper, Spider и Scraper — полностью бесплатны;

- все платные инструменты (кроме Data Miner) имеют API (Application Program Interface — программный интерфейс, который позволяет настроить совместную работу с другими программами) .

Scrapy. Introducing Scrapy

A framework is a reusable, “semi-complete” application that can be specialized to produce custom applications. (Source: Johnson & Foote, 1988 )

In other words, the Scrapy framework provides a set of Python scripts that contain most of the code required to use Python for web scraping. We need only to add the last bit of code required to tell Python what pages to visit, what information to extract from those pages, and what to do with it. Scrapy also comes with a set of scripts to setup a new project and to control the scrapers that we will create.

It also means that Scrapy doesn’t work on its own. It requires a working Python installation (Python 2.7 and higher or 3.4 and higher - it should work in both Python 2 and 3), and a series of libraries to work. If you haven’t installed Python or Scrapy on your machine, you can refer to the setup instructions . If you install Scrapy as suggested there, it should take care to install all required libraries as well.

scrapy version

in a shell. If all is good, you should get the following back (as of February 2017):

Scrapy 2.1.0

If you have a newer version, you should be fine as well.

Scrapy. Introducing Scrapy.

Scrapy is a powerful Python framework that provides a set of scripts that contain most of the code required to use Python for web scraping. With Scrapy, we only need to add the last bit of code required to tell Python what pages to visit, what information to extract from those pages, and what to do with it.

Scrapy also comes with a set of scripts to set up a new project and to control the scrapers that we will create. This means that Scrapy doesn't work on its own; it requires a working Python installation (Python 2.7 and higher or 3.4 and higher - it should work in both Python 2 and 3).

If you haven't installed Python or Scrapy on your machine, you can refer to the setup instructions. If you install Scrapy as suggested there, it should take care to install all required libraries as well.

Once you've installed Scrapy, you can verify that everything is working correctly by running a shell command. If all is good, you should get the following back (as of February 2017):

$ scrapy version 1.8.0

If you have a newer version, you should be fine as well.

To introduce the use of Scrapy, we will reuse the same example we used in the previous section. We will start by scraping a list of URLs from the list of faculty of the Psychological & Brain Sciences and then visit those URLs to scrape detailed information about those faculty members.

Easy Web Scraping Tools. The Essential Guide To Web Scraping Tools

James Phoenix

James Phoenix

Web scraping is an effective and scalable method for automatically collecting data from websites and webpages. In comparison, manually copying and pasting information from the internet is incredibly cumbersome, error-prone and slow.

As scraping data can be, there are now many web scraping tools to choose from, making it essential that you pick the right piece of software-based upon your specific use case and technical ability.

Choosing The Right Web Scraping Tool For The Job

Often web scraping browser extensions have fewer advanced features and are more suited to collecting specific information from fewer URLs, whereas open-source programming technologies are much more sophisticated, allowing you to scrape huge quantities of data efficiently and without interruption from servers.

Scraping isn’t illegal but many sites do not want robots crawling around them and scraping their data – a good web scraping application must be able to avoid detection .

Another important aspect of scraping is that it can be resource-intensive. Whilst smaller web scraping tools can be run effectively from within your browser, large suites of web scraping tools are more economical as standalone programs or web clients.

One step further still are full-service web scraping providers that provide advanced web scraping tools from dedicated cloud servers.

Web Scraper Browser Extensions

This type of web scraping tool acts as an extension forand, allowing you to control scraping tasks from within your browser as you search the internet. You can have the web scraper follow you as you search manually through some pages, essentially automatically copying and pasting data, or have it perform a more in-depth scrape of a set of URLs.

Web Scraper from webscraper.io is a Chrome extension, enabling you to scrape locally from the browser using your own system resources. It’s naturally limited in scope but it does allow you to construct a sitemap of pages to scrape using a drag-and-drop interface. You can then scrape and intelligently categorise information before downloading data as a CSV file.

| Benefits | Ideal For |

| Particularly good at scraping detailed information from limited web pages (e.g. a few product categories or blog posts). | Smaller web-scraping projects. |

| Conveniently executes from a Chrome browser. | Collecting ideas from blogs and content. |

| Totally free. | Scraping data from small shop inventories. |

This convenient browser extension scraper enables you to efficiently scrape a wide array of data from modern webpages and compile it into CSV and XSL files. Data is easily converted into clear well-structured tables and semi-manual scraping controls allow you to be selective about what data you scrape or ignore.

Recipes

Dataminer also comes bundled with pre-built scripts/tasks as a ‘recipe’, these are web scraping schematics developed by the community that instruct the scraper on what data to collect. Some of these include scraping data from e-commerce sites such as eBay, Amazon and Alibaba, or for social media, news sites, etc.

| Benefits | Ideal For |

| User-friendly browser extension. | Smaller commercial projects and startups. |

| ‘Recipes’ provide readymade scraping queries optimised for popular scraping tasks. | Those who need to scrape specific or niche data without coding knowledge. |

| Scalable cloud-server run services for bigger projects or businesses. | Anyone looking for a streamlined scraper for collecting data from popular sites. |

Google Chrome Developer Tools

Pricing: Free

Google chrome has an in-built developer tools section, making it easy to inspect source code. I find this tool incredibly useful, espeically when you’re looking to locate certain html elements and would like to get either:

- The XPath selector.

- The CSS selector.

1. The copy functionality

Example of an XPath query:

//*/div/div/div/div

Alternatively we can copy a CSS selector.

Example of a CSS selector query:

#root > div > article > div > section:nth-child(4) > div

2. The $x command

A useful command in Google Chrome Developer tools is the $x command which will execute XPATH queries within the console section of the browser. It’s a great way for you to quickly test and refine your XPATH queries before using them within your code.

Also, before even pressing enter, $x will pre-fetch the matched elements so that you can easily refine and get the perfect query.

3. Listening and copying network requests

Whilst web scraping you will often have to replicate complex network requests which may need to include specific:

- Headers.

So even if the content loads after clicking a button or scrolling on a page you can easily record and replay these events via Google developers tools.

Web Scraping ai Tools. or how to become a Web Scraping ninja

In reality, this means our team has to collect data on browsing patterns and train the model to generate plausible combinations of browsers, OSs, devices, and other attributes collected when fingerprinting that guarantee bypassing anti-scraping measures. They do this by first collecting the known “passing” fingerprints into a database, then labeling them by category and feeding the data to the AI model to steer its learning. Ideally, the AI model should provide fingerprints that are random but still human enough not to be discarded by the website. Monitoring success rates per fingerprint and creating a feedback loop can also help the AI model improve with time.

As you can see, generating realistic web fingerprints is not exactly your web scraping crash course. ML-based anti-bot algorithms gave rise to AI-powered dynamic fingerprinting, machine against machine. But these days it would be quite hard to scrape at scale and stay on top of the data extraction business without such technology and strategy behind it.

We’ve covered 3 web scraping powered by AI projects here: generating web fingerprints for combating anti-scraping measures, identifying CSS selectors for quick scraper repairs, and product mapping for competitor analysis. Hopefully, we’ve managed to shine some light on this complicated combo and the machines will spare our lives when they will inevitably go to war against each other and, ultimately, humanity. Cheers!

Best Web Scraping Tools. Apify

Apify is a well-known provider of web scraping tools and offers a wide range of pre-built web scrapers, with most of them dedicated to specific use cases and purposes. Apify comes with a great browser extension and allows connecting to proxies using an API. Apify has a great reputation and is working with some of the biggest companies in the world, including Microsoft, Samsung, Decathlon, and more.

You can use their amazing Node.js library, Crawlee, to empower your web scrapers and give them an almost unfair advantage. You can also libraries you are used to, including Puppeteer, Scrapy, Selenium, or Playwright. Apify has the richest GitHub we’ve seen, so kudos to them for that.

RAM memory scales, and starts from 4GB on the free plan, which is already enough for a small scraping project. You also get a high number of team seats, so you can invite your colleagues to the same account. This is one of the only providers that lets you get full Discord support on the free plan, and all the paid plans come already with chat support!

Their prices are great, for $49 you can scrape around 12K pages including JS rendering! If you want to scrape simple HTML pages, you’ll get 55K requests, which is pretty amazing. You can buy add-ons like shared DC proxies (not recommended, high blocking rate), increase max memory, and increase the number of seats.

Prices range from free to $499/mo, with custom enterprise plans available too. In conclusion, Apify is one of the best choices out there, especially if you have a particular use case.